Today we are taking a look at Intel’s new high-end Ivy Bridge chip, the Core i7-3770K. We are focusing specifically on the GPU side of the Ivy Bridge equation in this article, simply because the CPU side of Ivy Bridge is a well-known quantity. AnandTech did an unsanctioned preview in early March which suggested that CPU performance would rise five to fifteen percent over Sandy Bridge, and our admittedly anecdotal testing seems to confirm a CPU-side bump in that range. The real question that we want to answer is whether or not the HD 4000 can make ends meet as a decent graphics solution for gaming. As such, the scope of this article is limited to evaluating the merits of Intel’s HD 4000 graphics, so don’t expect to see any numbers comparing Ivy Bridge to Sandy Bridge.

When I first started testing the Core i7-3770K, I was pleasantly surprised by the level of graphics performance that it brought to the table. I for one remember trying desperately, just a few years ago, to get EA’s Spore to run on a Core 2 based laptop with Intel’s 945GM integrated graphics chipset. Needless to say, Intel’s HD 4000 is leaps and bounds ahead of the graphics chips Intel was producing during the Core 2 era.

Our Favorite Slides

With that in mind, let’s take a look at some of the slides that Intel sent us. Note: Slides edited for brevity and focus.

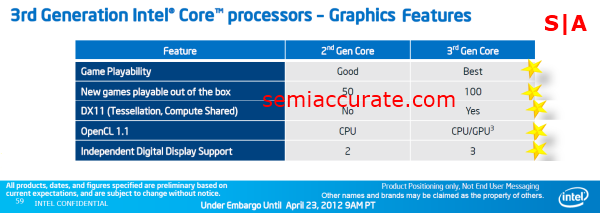

What we are looking at here are selections from Intel’s own comparison between its last-generation HD 3000 parts and its current-generation HD 4000 parts. Intel is using its tried and true four-letter-word marketing strategy to (over)simplify the differences in performance between Sandy Bridge and Ivy Bridge with the adjectives “Good” and “Best”. We can also see that, by Intel’s own measure, only fifty games were actually playable out of the box on the HD 3000 graphics part. How many video games are available in the Steam library alone? Over 4500 at last count. So while it’s gratifying to see that Intel now supports 100 games with the HD 4000, it’s still a bit depressing to run the numbers and see that, at Intel’s current rate of progress, it’s going to take at least the rest of the decade to offer support for all of the games available today. And while I’m sure that won’t end up being the case due to competitive pressures, I would like to point out, in the nicest of ways, that Intel really needs to get its act together on the graphics driver front. 100 games is better than last year, but still not good enough.
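As a rough back-of-the-envelope check on that estimate, here is a minimal Python sketch. It assumes (my assumption, not Intel’s) one graphics generation per year and two possible growth patterns: the supported list doubling every generation, as it did from 50 to 100, or growing by a flat 50 titles each time. The function names are made up for illustration; the 4500 figure is the Steam count quoted above.

```python
import math


def generations_if_doubling(supported, target, growth_factor=2):
    """Count generations until `supported` reaches `target`,
    assuming the supported list is multiplied by `growth_factor` each time."""
    generations = 0
    while supported < target:
        supported *= growth_factor
        generations += 1
    return generations


def generations_if_linear(supported, target, added_per_generation):
    """Count generations until `supported` reaches `target`,
    assuming a fixed number of titles is added each time."""
    return math.ceil((target - supported) / added_per_generation)


# 100 games supported today, roughly 4500 in the Steam library.
print(generations_if_doubling(100, 4500))    # 6
print(generations_if_linear(100, 4500, 50))  # 88
```

Under the doubling assumption the list passes 4500 titles after six generations, which lands late in the decade; at a flat 50 titles per generation it would take far longer.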

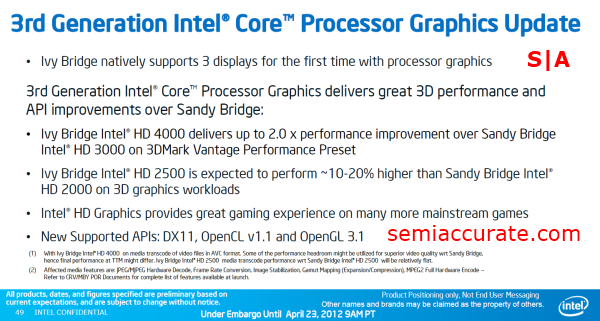

One of the biggest changes between Sandy Bridge and Ivy Bridge is DirectX 11 support. This is a big step forward for Intel, which has been notably lagging behind the competition in terms of “check box” GPU features. Now it should be noted that Intel is still behind in terms of DirectX feature sets, as Nvidia and AMD have just moved on to DirectX 11.1. But at the very least there is now a graphics chip from Intel that fully supports one of Windows 7’s biggest launch features, DirectX 11. Another big change is OpenCL 1.1 support for Intel’s GPU. In its simplest form, this allows Intel’s graphics EUs to be used for general-purpose compute tasks. Needless to say, GPU-based OpenCL support is just another one of the ways that Intel is closing the gap with AMD’s APUs. In a very practical sense, you can look at Intel’s support for OpenCL as a step towards AMD’s vision of a Heterogeneous System Architecture. The thing about good ideas, like the integrated memory controller and now GPU-based OpenCL support, is that they tend to spread.

The last big feature, other than performance, that Intel is bringing to the table graphics-wise with Ivy Bridge is Eyefinity, err, 3D Vision Surround, err, support for three independent digital displays. Yeah, Intel doesn’t have the catchiest name for triple-monitor support, but they are offering it. Unfortunately our Z77 review motherboard only has one HDMI port, so we are short the DisplayPort output and splitter we’d need to test out Intel’s triple-monitor support. Hopefully we’ll be able to investigate that technology in the future.

The overarching take-away from this is that the progress that Intel has made on supporting current industry standards is good; but when we look at the competitive landscape it’s obvious that there is still some room for improvement. Haswell anyone?

Moving over to the performance side, Intel is promising up to double the performance of Sandy Bridge’s HD 3000. That kind of performance would put it about on par with AMD’s Llano APU. That’s a pretty large gap to close in just one generation, but stranger things have happened. In any case, we won’t be comparing Ivy Bridge to Sandy Bridge or Llano in this article, but it is interesting to know what Intel is aiming for. Another data point worth noting is Intel’s claim that the GT1, or HD 2500, variant of Ivy Bridge will offer 10 to 20 percent better performance than the GT1, or HD 2000, variant of Sandy Bridge. The HD 2000 didn’t receive a lot of press coverage compared to the HD 3000, and the HD 2500 probably won’t get as much coverage as the HD 4000 part we are looking at, but it is nice to know that the floor for Ivy Bridge graphics performance is higher than that of its predecessor.

Looking at the Drivers

Let’s look first at the software side of Intel’s HD 4000. Here we have Intel’s graphics control panel from the pre-release drivers that they supplied for review. As graphics control panels go, Intel’s probably has the nicest-looking user interface you’ll find, but it also offers the least functionality of any current-generation control panel. That’s not to say that the basic settings aren’t there; you can pick your display, your resolution, color depth, and refresh rate. But you can do all of that using only Microsoft’s rather rudimentary control panel. To Intel’s credit, they have made it possible to create and save settings profiles, but unless you’re working with a multi-monitor set-up or constantly swapping back and forth between displays, you’ll find custom profiles of little use.

Taking a look at the 3D settings in Intel’s Graphics Control Panel, we can see that the options are pretty limited compared to the settings we’re used to in Nvidia’s control panel or AMD’s Vision Engine Control Center. We can choose from three pre-sets: performance, normal, and quality. We can opt out of these pre-sets by checking the custom settings box. The custom settings options allow us to do two things: first, we can force a certain level of anisotropic filtering, and second, we can force Vsync. And then there’s the somewhat mysterious “Application Optimal Mode”. It should be noted that all of the testing in this article was done with the control panel configured as it’s shown in these screen shots, which means that we left Application Optimal Mode enabled. I chose to leave that setting enabled because it was enabled by default, and as such will most likely be what you’ll find out in the wild.

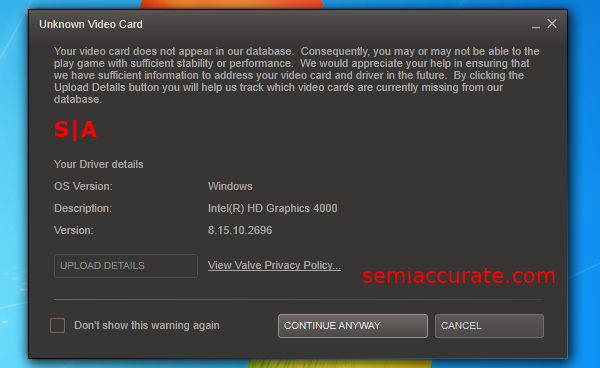

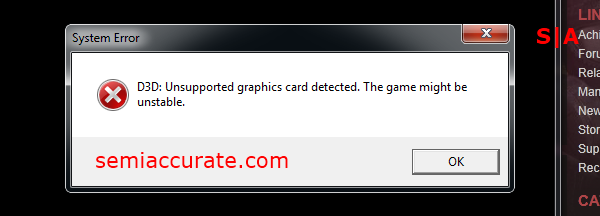

Throughout our testing one thing became increasingly apparent to us: the world isn’t ready for Intel’s HD 4000. I say this because we ran into issues, or at least some peculiarities, in about half of the games we tested. A great example of this lack of preparedness is the prompt that would pop up every time we ran a Source engine based video game.

As you can see Steam didn’t know how to handle the HD 4000. Now admittedly all of our testing was done prior to the actual launch of these parts. But it still stands to reason that Intel would, out of a sense of self interest at the very least, let Valve know that the HD 4000 was coming.

Putting the HD 4000 Through the Wringer

The goals that I had in mind while doing my testing were pretty straightforward. First, set the game’s resolution to my monitor’s native resolution of 1920 by 1080, which I’ll refer to as 1080P throughout the article for the sake of simplicity. Then, find the highest graphical settings that a given game will support while still maintaining playability. At the same time, I wanted to keep an eye out for any issues on the HD 4000 that a user might encounter while trying to play these games.

Deus Ex: Human Revolution

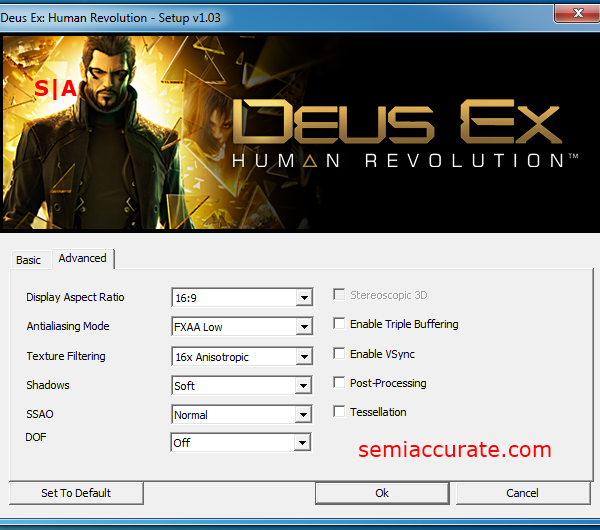

The first game I tested Ivy Bridge with was last year’s Deus Ex: Human Revolution. Now Deus Ex isn’t a particularly demanding game, but it does use a number of high-quality graphical features, like SSAO, DX11, soft shadows, post processing, and tessellation. Additionally, it’s a game that falls under the banner of AMD’s Gaming Evolved program. With that in mind I turned off all of the advanced features, with the exceptions of soft shadows and the DX11 rendering path; I also set the in-game resolution to 720P. The game, while visually quite unattractive due to the reduced quality settings, was completely playable. So I started to dial up the game’s graphical settings, looking to find the highest quality settings that were still reasonably playable. What I ended up with was pretty surprising to me: I was able to bump my resolution up to a full 1080P, turn on ‘Normal’ SSAO, and even apply the game’s FXAA ‘Low’ option for anti-aliasing. The biggest sticking point I ran into was trying to increase the SSAO setting from ‘Normal’ to ‘High’, which would cause the game to immediately become choppy and unplayable. Despite this boundary, Intel’s HD 4000 performed admirably in Deus Ex, where it was able to spit out frames at 1080P, in DX11, and with a little anti-aliasing to boot.

Here we have a screen shot of Deus Ex running on the HD 4000 scaled down from 1080P. As you can see, even at these reduced quality settings it’s still a decent looking game. Additionally we don’t have any graphical glitches to report.

Company of Heroes

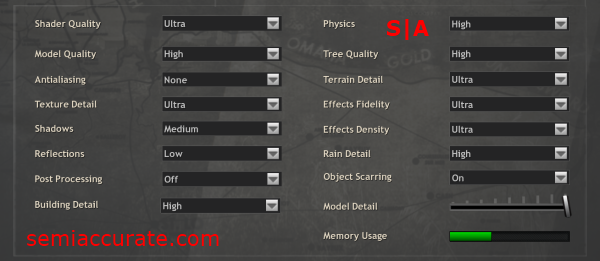

The next game in our suite is Relic’s older real time strategy title, Company of Heroes. Considering how old Company of Heroes is, it continues to amaze me how demanding it can be. The game offers DX10.1 support through the “Ultra” shader quality option as well as a ton of individually configurable graphical settings. Now Company of Heroes was part of Nvidia’s TWIMTBP program, but opted to use Havok’s physics engine over Nvidia’s own PhysX, which is one of the reasons why we’re able to dial up the physics settings to their max, without cratering our frame rates.

I started my testing by setting the resolution to 1080P, and then turning all of the graphical settings up to their maximums, but leaving anti-aliasing off. Then I ran the in-game benchmark and adjusted the game’s settings down until average frame rates were about 30 frames per second, which was the point at which the game seemed reasonably playable to me. I ended up dropping a few settings down a notch, namely post processing, reflections, and shadows. But once I had made those changes the game skipped smoothly along at 1080P, running what I would consider to be high detail settings. At this point I think that it’s fair to say that Ivy Bridge is proving itself, at least in its highest-end HD 4000 incarnation, to be a surprisingly capable gaming platform.
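The tuning procedure described above can be sketched as a simple loop. This is only an illustration of the approach, not a tool I used: `tune_settings`, the numeric quality levels, and `fake_benchmark` (a stand-in with invented numbers, not measurements from the HD 4000) are all hypothetical.

```python
from itertools import cycle


def tune_settings(settings, lower_order, run_benchmark, target_fps=30.0):
    """Lower settings one notch at a time, in rotation, until the
    benchmark average reaches target_fps or nothing can go lower."""
    current = dict(settings)
    fps = run_benchmark(current)
    at_floor = 0  # consecutive settings already at their lowest level
    for name in cycle(lower_order):
        if fps >= target_fps or at_floor == len(lower_order):
            break
        if current[name] > 0:
            current[name] -= 1            # drop this setting one notch
            fps = run_benchmark(current)  # re-run the benchmark
            at_floor = 0
        else:
            at_floor += 1
    return current, fps


def fake_benchmark(settings):
    """Stand-in for an in-game benchmark. The formula is invented
    purely so the example runs; it is not real HD 4000 data."""
    return 45.0 - 3.0 * sum(settings.values())


# Hypothetical quality levels: 3 = maximum, 0 = lowest.
start = {"post_processing": 3, "reflections": 3, "shadows": 3}
order = ["post_processing", "reflections", "shadows"]
print(tune_settings(start, order, fake_benchmark))
```

In practice `run_benchmark` would be a person launching the in-game benchmark and reading off the average, so the loop is really just a description of the manual routine.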

This is a screen shot from our Company of Heroes benchmark running on the HD 4000. As you can see, there are quite a few units on the map, as well as explosions and debris. Despite this, Intel’s HD 4000 was able to put out playable frames the entire time.

Counter-Strike: Source

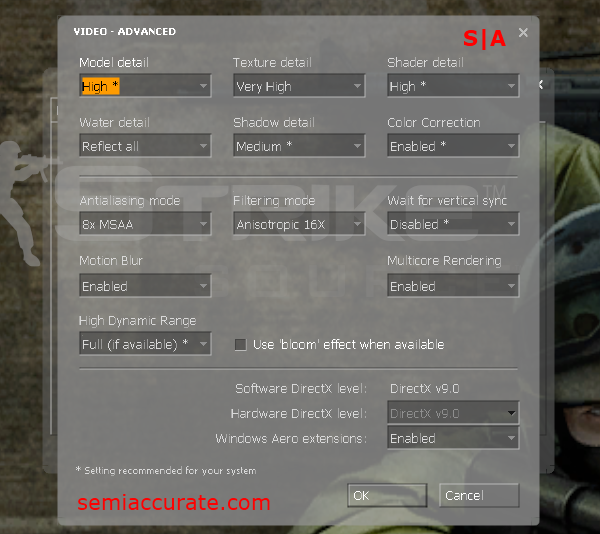

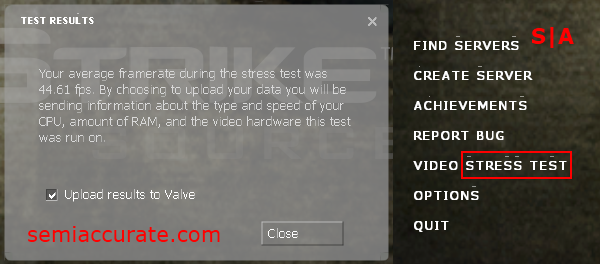

Moving on to Valve’s Counter-Strike: Source, I found that the HD 4000 was able to max out all of the graphical settings, including 8x multisample anti-aliasing, at 1080P. Running the in-game benchmark gave us an average frame rate of about 44 frames per second. Now it’s important to remember that this game uses an older version of Valve’s Source engine, and runs in DX9. But at the same time it’s worth noting that back in 2008, I used to play this game on an integrated graphics chipset called the GeForce 6150 at almost all low settings and at a mere 1024 by 768 resolution. Now, only four years later, I can max the game out at 1080P on Intel’s HD 4000; that’s quite an improvement for “integrated” graphics.

While the game itself was completely playable, I did notice a rather weird problem with the way the HD 4000 was rendering text in Counter-Strike. If you look at the menu options you can see white dots above every letter “c” and white bars above every letter “s”. Clearly the HD 4000 has some sort of problem rendering text properly in this game. I looked into this a bit deeper and found that disabling anti-aliasing from Counter-Strike’s in-game menu fixed the text corruption, while dropping the level of anti-aliasing down from 8x MSAA to 4x or 2x had no effect on it. This presents the user with a bit of a trade-off: do you want corrupted text or smooth edges? For the purposes of this review we chose smooth edges over non-corrupted text in an attempt to strain the HD 4000 to the greatest extent possible.

Metro 2033

Metro 2033 is a game that caused me quite a bit of trouble when I was trying to tune it. The Depth of Field filter that this game automatically enables when you run it in DX11 mode, as other reviewers have noted, basically takes a baseball bat to the knees of your frame rates. Disabling it allowed us to run the game in DX11 mode, with tessellation enabled, and at the normal quality preset without anti-aliasing. At these settings the game still looked pretty good, which is more than we can say for other games, namely Crysis, that lose a fair bit of their graphical edge when you start dropping their settings down from high. The caveat, though, is that frame rates were low; think mid-teens of frames per second. Having beaten nearly a third of the game during a testing “break”, I can attest to the game being completely playable and without the kind of input lag that you usually find when you run a game at such low frame rates. So while I thought the game was perfectly playable, you might feel differently if you were to judge it solely by the frame rate.

This is a screen shot from Metro 2033 at the quality settings that we listed above, running once again on Intel’s HD 4000 graphics. As you can see, the game still looks pretty good; despite using only the “normal” preset, it has maintained most of its visual atmosphere.

One annoyance that did leave us a little shaken about playing Metro 2033 on the HD 4000 was this error that would pop up every time we launched the game. Luckily we didn’t actually experience any issues due to using an “unsupported graphics card”, but this warning was a bit off putting, and something you wouldn’t encounter on one of Nvidia’s or AMD’s GPUs.

With that out of the way we come to a momentary intermission in our review of the HD 4000. You can find part two of the review here. S|A

Thomas Ryan
