One thing you’ll have noted from our earlier article on the full stack of EPYC SKUs is that the TDPs on these parts are pretty high even by desktop standards. Compared to the Intel E5 SKUs they compete against they look more reasonable. But to keep the focus on EPYC let’s delve into why AMD’s official TDP numbers might be particularly misleading.

UPDATE 6/20/17 @ 5:09 PM CT: This article ended up getting published before the article detailing the SKUs. That article will arrive shortly. Enjoy!

EPYC implements the same Zen core as the desktop Ryzen parts that we’ve come to know rather well over the past few months.

A rudimentary comparison of AMD’s most efficient 8 core Ryzen SKU, the 65 Watt TDP R7 1700, and AMD’s most power efficient 8 core EPYC SKU, the 120 Watt TDP 7251, might lead one to believe that on a per core basis AMD’s Zen has become nearly twice as power-hungry as it’s moved from the desktop into the server space. Obviously this is a misleading comparison for a variety of reasons, all of which we’re going to discuss now.
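
To put a number on that rudimentary comparison, here is a quick back-of-the-envelope sketch. It uses only the TDPs and core counts quoted above; the dictionary layout and the `tdp_per_core` helper are our own illustration, and TDP is of course not the same thing as measured core power.

```python
# TDP figures (watts) and core counts as quoted in the article.
ryzen_1700 = {"tdp_w": 65, "cores": 8}
epyc_7251 = {"tdp_w": 120, "cores": 8}


def tdp_per_core(part):
    """Naive per-core figure: rated TDP divided by core count."""
    return part["tdp_w"] / part["cores"]


# How much more TDP per core the EPYC part carries than the Ryzen part.
ratio = tdp_per_core(epyc_7251) / tdp_per_core(ryzen_1700)

print(f"R7 1700:   {tdp_per_core(ryzen_1700):.3f} W per core")
print(f"EPYC 7251: {tdp_per_core(epyc_7251):.3f} W per core")
print(f"EPYC / Ryzen ratio: {ratio:.2f}x")
```

The ratio lands a bit under 2x, and it lumps everything outside the cores (memory controllers, PCI-E, fabric) into the per core figure, which is exactly why the comparison misleads.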

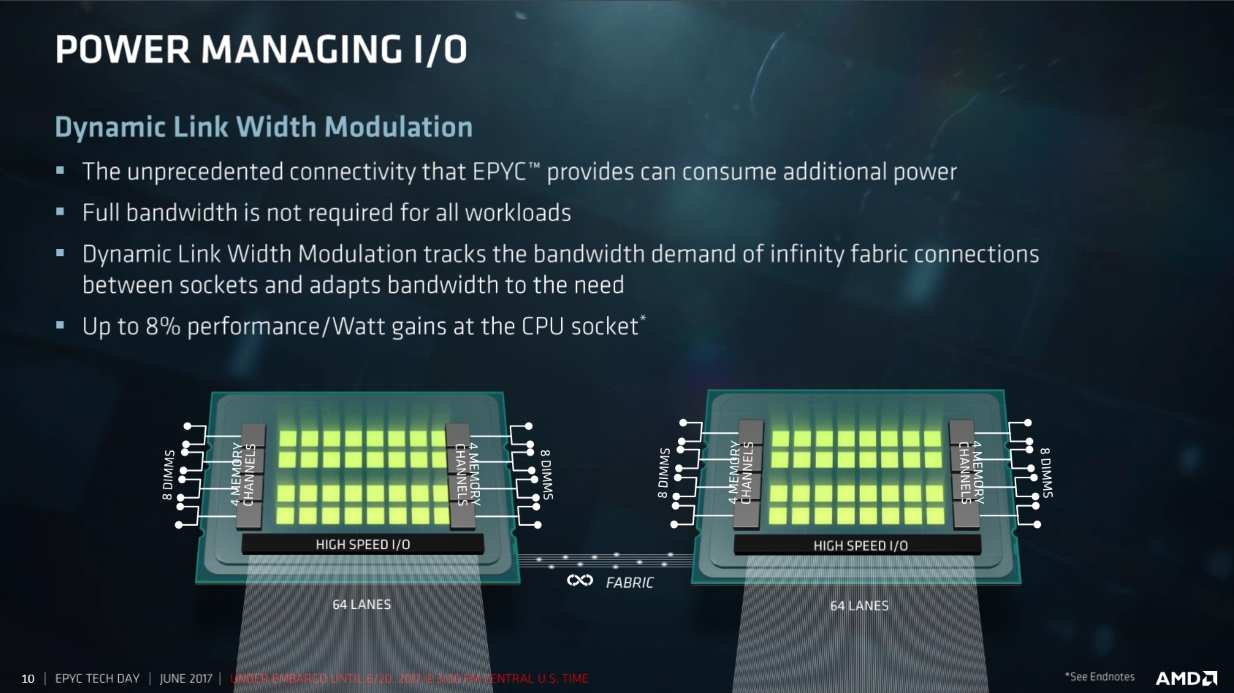

What are the high level differences between Ryzen and EPYC? To start with, every Ryzen chip uses a single 8 core die. Every EPYC chip requires four dies. Each EPYC chip offers four times as many memory channels as a Ryzen part and more than five times the number of PCI-E lanes. EPYC’s design also puts a constant load on AMD’s Infinity Fabric to move data between dies and across sockets. All of these factors add up to a chip where conceivably more than half the power envelope could be devoted to I/O rather than core power consumption under load.

From a power efficiency perspective moving data around is a great way to burn power. This is why we often see a focus on increasing cache sizes and reducing the need to physically move data around inside of modern chips. Given this point of reference one could see EPYC’s epic focus on I/O as a conscious attempt to build a chip that burns most of its Watts not on number crunching but on data movement.

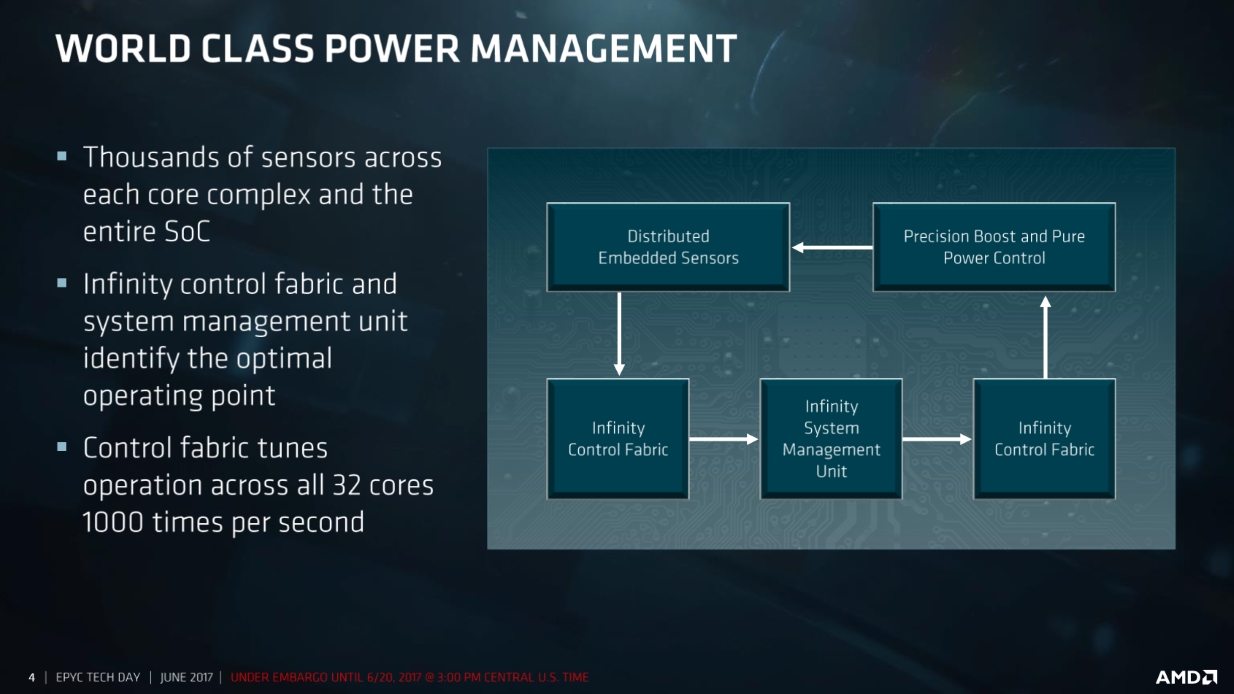

Luckily AMD isn’t letting its cores or I/O run wild. Unsurprisingly the company has developed a pretty complex scheme for managing the power versus frequency curve and powering down I/O blocks as necessary. The basis for this power management system is a network of sensors that AMD’s designers have placed throughout the chip that can provide real-time information to the control portion of AMD’s Infinity Fabric. The control fabric and the Infinity System Management Unit then work together to define the optimal operating point on the curve for a given core to run at. Inside of EPYC this tuning process occurs every millisecond.
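
AMD hasn’t published the actual control algorithm, so treat the following as a minimal sketch of the general idea rather than what the System Management Unit really does. The curve values, the temperature limit, and the `pick_operating_point` function are all hypothetical; the only thing taken from AMD is the notion of re-evaluating sensor data on a fixed tick and choosing a point on the power versus frequency curve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperatingPoint:
    freq_ghz: float
    power_w: float  # estimated core power at this frequency


# Hypothetical power versus frequency curve for one core (not AMD data).
CURVE = [
    OperatingPoint(2.0, 3.0),
    OperatingPoint(2.4, 4.5),
    OperatingPoint(2.8, 6.5),
    OperatingPoint(3.2, 9.5),
]


def pick_operating_point(power_budget_w, temp_c, temp_limit_c=95.0):
    """Choose the fastest point that fits the core's current power budget.

    Called once per control tick (the article cites one millisecond).
    Falls back to the slowest point when the core is at its thermal
    limit or when nothing fits the budget.
    """
    if temp_c >= temp_limit_c:
        return CURVE[0]
    fitting = [p for p in CURVE if p.power_w <= power_budget_w]
    if not fitting:
        return CURVE[0]
    return max(fitting, key=lambda p: p.freq_ghz)


# One simulated tick: 7 W of budget and a cool core.
print(pick_operating_point(power_budget_w=7.0, temp_c=60.0))
```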

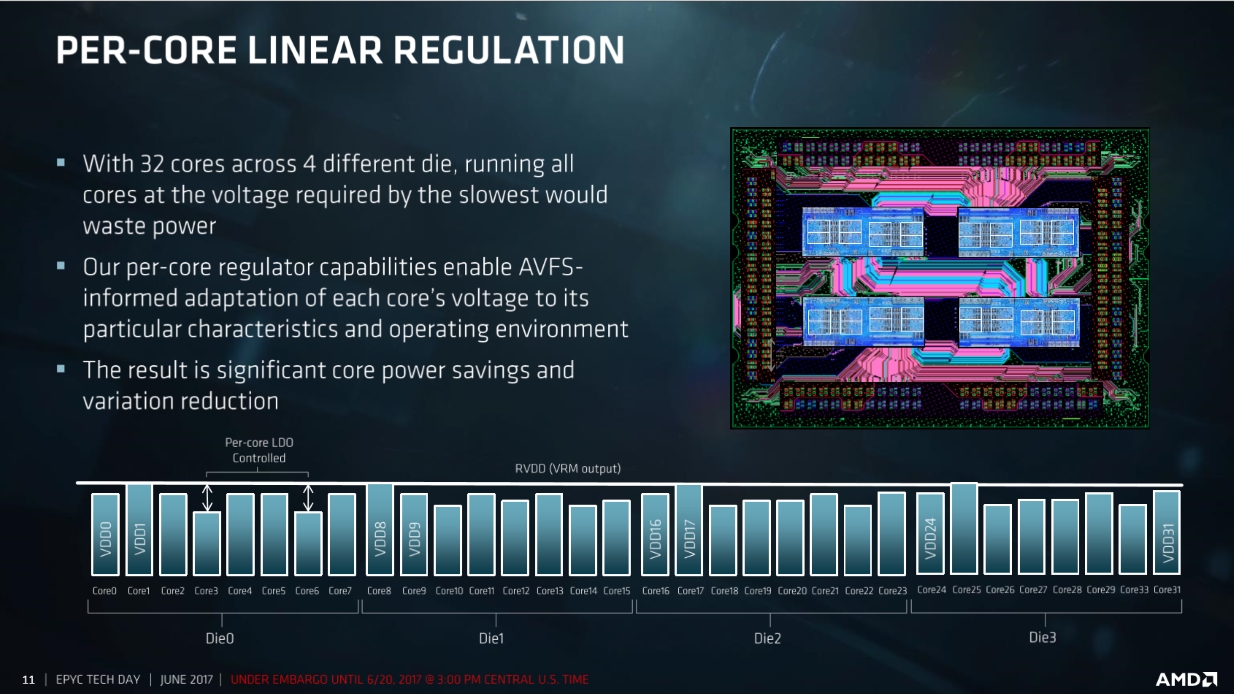

One of the coolest things that AMD’s doing with EPYC is voltage regulation on a per core basis. This helps by enabling AMD’s power management system to pick the right place on the power versus frequency curve while considering the characteristics of each individual core rather than just picking a baseline that works for all of the cores. Not all cores are created equal and with per core voltage regulation AMD is actively exploiting those variations.
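
A rough way to see why this matters: dynamic power scales with the square of voltage at a fixed clock, so a shared rail that has to satisfy the neediest core overfeeds all the others. The sketch below compares the two approaches using made-up minimum voltages; none of these numbers come from AMD, and leakage and regulator losses are ignored.

```python
# Hypothetical minimum stable voltages (volts) for four cores at the same clock.
vmin = [0.95, 1.00, 0.92, 1.05]


def relative_dynamic_power(voltages):
    """Sum of V^2 per core: dynamic power is proportional to V^2
    when frequency and switched capacitance are held constant."""
    return sum(v * v for v in voltages)


# Shared rail: every core must run at the voltage the worst core needs.
shared_rail = [max(vmin)] * len(vmin)

# Per core regulation: each core runs at its own minimum voltage.
per_core = list(vmin)

saving = 1 - relative_dynamic_power(per_core) / relative_dynamic_power(shared_rail)
print(f"Dynamic power saved by per core regulation: {saving:.1%}")
```

The size of the saving depends entirely on how much the cores vary, which is the variation AMD says it is exploiting.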

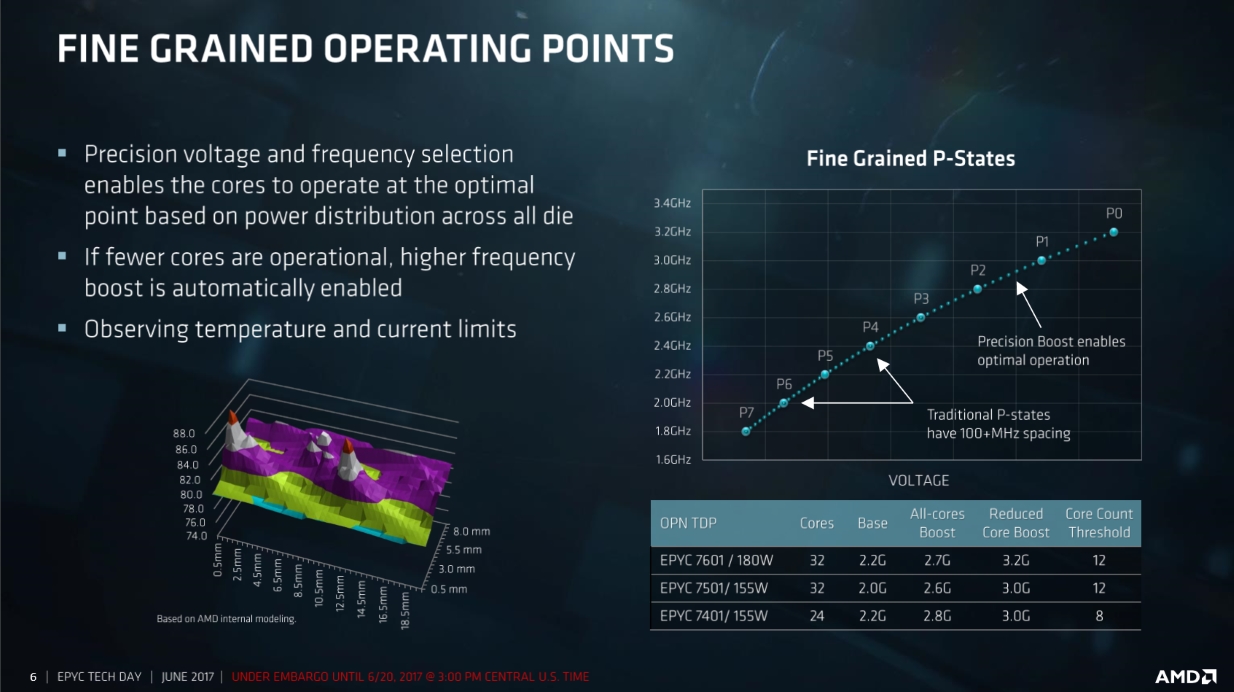

Just like its desktop Zen parts, AMD’s EPYC has three clock rates of note: the base clock, the all core boost clock, and the reduced core count boost clock. Precision Boost’s fine grained steps allow AMD to move as necessary between these three states. It’s worth noting that on AMD’s top SKUs the all core boost is in the high 2.x GHz range and reduced core count boost is at or just above 3 GHz. To enable the reduced core count boost states, 12 or fewer cores have to be active on AMD’s 32 core models.
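
As a simple illustration of how those three states relate, here is a sketch that picks the highest state a chip could reach. The 12-of-32 core threshold comes from the paragraph above; the `power_headroom` flag and the function itself are our own simplification, and the real Precision Boost moves in fine grained steps between these points rather than jumping among three labels.

```python
def boost_state(active_cores, power_headroom, total_cores=32, reduced_limit=12):
    """Return the highest clock state available for the given load.

    reduced_limit=12 on a 32 core part is from the article; treating
    power/thermal headroom as a single boolean is an assumption.
    """
    if not 0 <= active_cores <= total_cores:
        raise ValueError("active_cores must be between 0 and total_cores")
    if not power_headroom:
        return "base"
    if active_cores <= reduced_limit:
        return "reduced core count boost"
    return "all core boost"


for cores in (8, 12, 13, 32):
    print(cores, "active cores ->", boost_state(cores, power_headroom=True))
```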

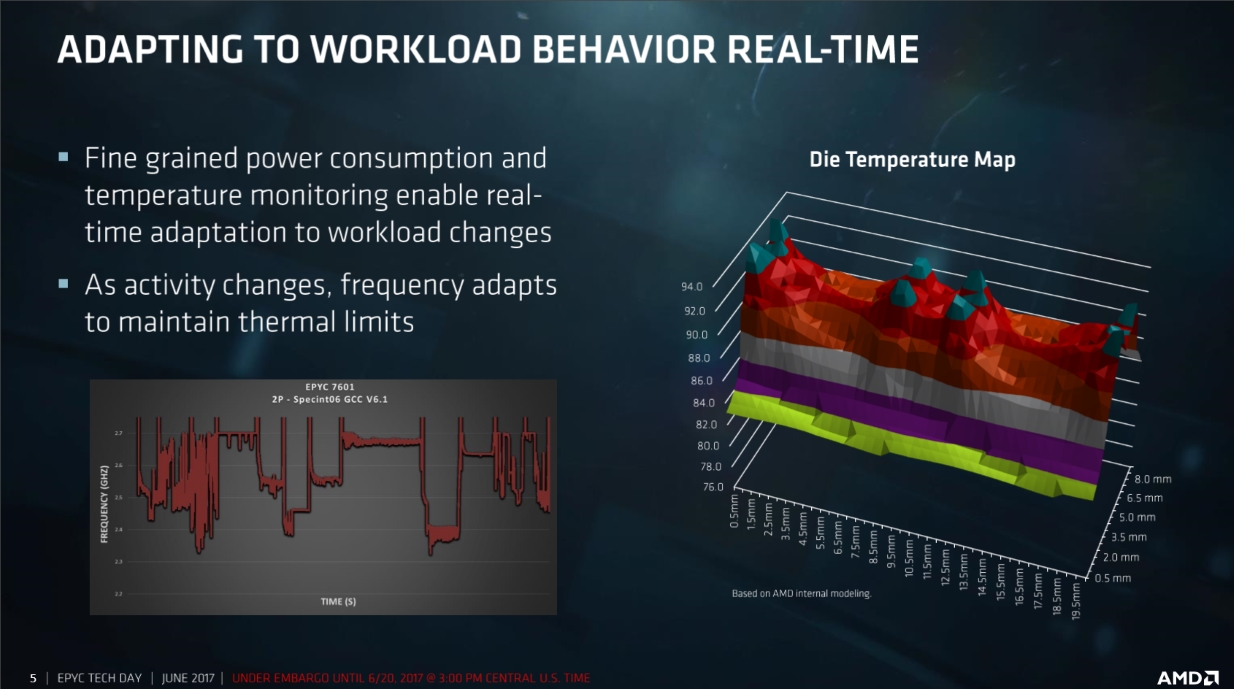

Compared to AMD’s consumer oriented offerings the clock rates on its EPYC chips are about 25 percent lower. According to AMD its official base clocks, which are down in the low 2.x GHz range, are a bit misleading though. Thanks to the capabilities of EPYC’s power management system, like the Pure Power and Precision Boost features, the company believes that its EPYC chips will spend most of their time in a boosted state. In the slide above AMD has provided a graph of clock speed over time in the SPECint benchmark where its EPYC chip never actually touches its rated base clock speed.

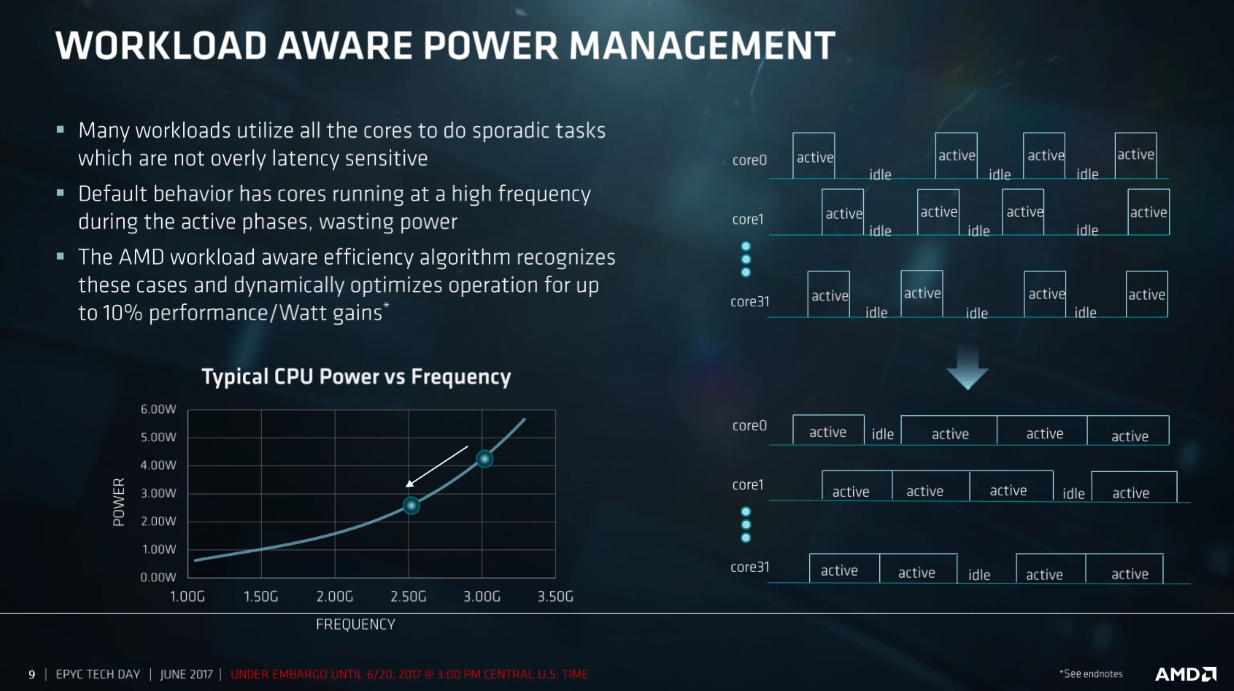

The race to idle is an often cited concept when architects discuss power efficiency issues. AMD is continuing to refine its approach to power management with workload awareness. In practice this means that AMD’s power management will opt to keep a workload running for longer if it means that the core can stay in a more efficient place on the power versus frequency curve, rather than boosting all the way up and completing the workload as quickly as possible. This has a couple of benefits: first off it improves performance per watt, but it also reduces the thermal impact of a given workload by spreading it out over time and reducing spikes.
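
The trade-off is easy to sketch with energy = power × time. In the example below every number is hypothetical (the workload size, the two operating points, and the idle power), and `energy_joules` is our own helper; the point is only to show how a slower but more efficient operating point can come out ahead of racing to idle over the same time window.

```python
def energy_joules(work_gcycles, freq_ghz, active_w, idle_w, window_s):
    """Energy used over a fixed window: run the work, then idle for the rest."""
    busy_s = work_gcycles / freq_ghz
    if busy_s > window_s:
        raise ValueError("work does not fit in the window at this frequency")
    return active_w * busy_s + idle_w * (window_s - busy_s)


# Same 6 billion cycles of work, same 3 second window, hypothetical power figures.
race_to_idle = energy_joules(6.0, freq_ghz=3.0, active_w=9.0, idle_w=0.5, window_s=3.0)
stay_efficient = energy_joules(6.0, freq_ghz=2.0, active_w=4.0, idle_w=0.5, window_s=3.0)

print(f"race to idle:   {race_to_idle} J, peak 9.0 W")
print(f"stay efficient: {stay_efficient} J, peak 4.0 W")
```

With a flatter power curve or a higher idle floor the result flips in favor of racing to idle, which is presumably why AMD makes the decision per workload rather than hard-coding one policy.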

This is an interesting update to a technology that we first saw on Carrizo. At the time AMD was using workload awareness to avoid boosting its cores for workloads where higher clock rates didn’t meaningfully improve performance. This new iteration of workload awareness takes that logic a step further.

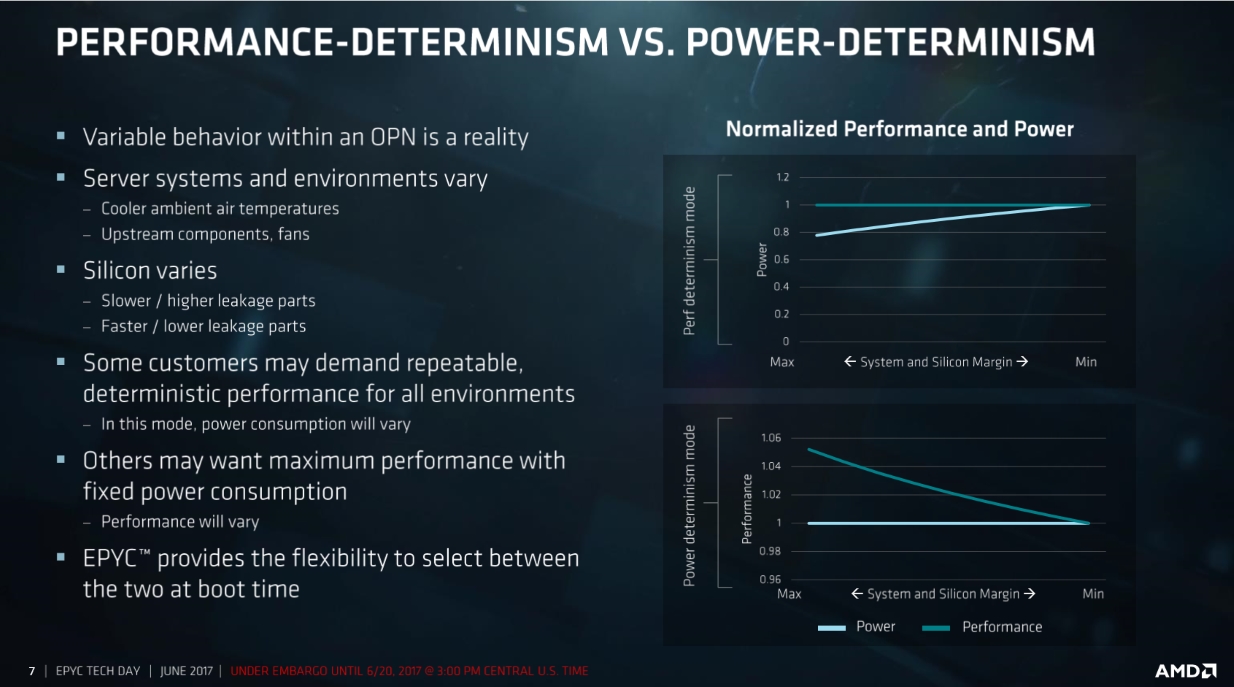

Of course maybe you’re not into all this EPYC performance variability. That’s why AMD has developed two new options that its customers can enable at boot: a mode where a consistent level of performance is maintained and a mode where a consistent level of power consumption is maintained. Essentially AMD is giving its customers the ability to pick their favorite variable on the power versus frequency curve rather than accepting what AMD defines as the sweet spot between the two.

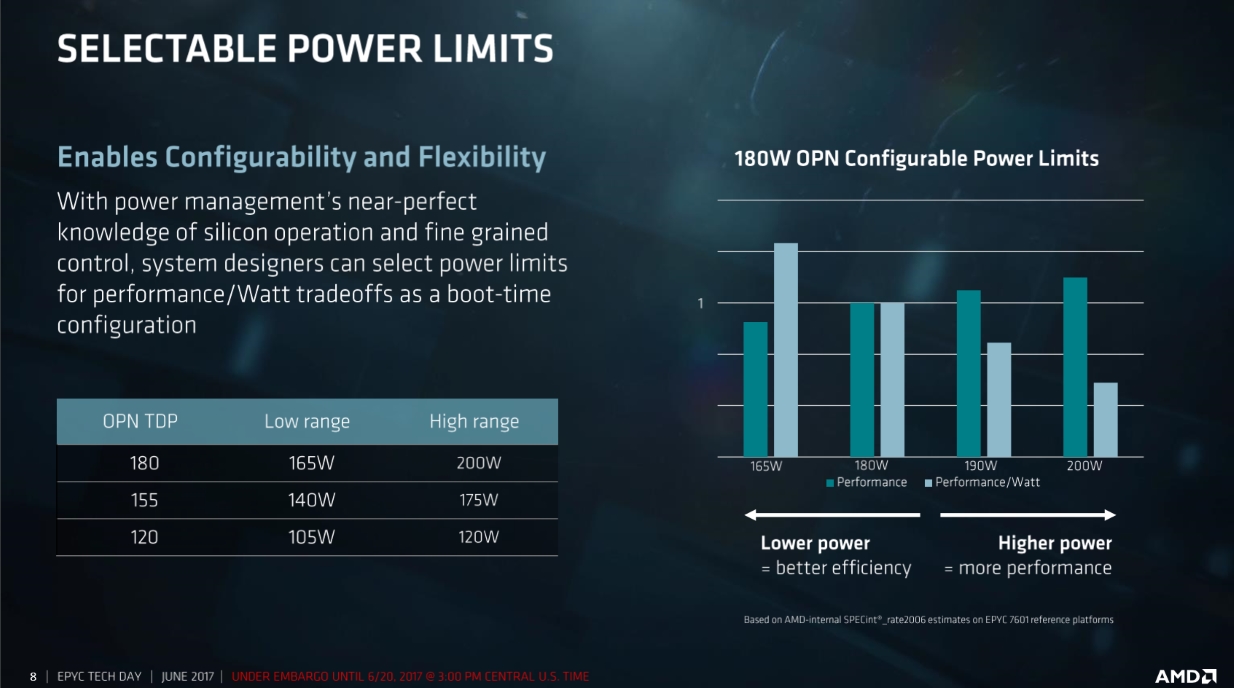

Extending down from that line of let-the-customer-choose logic are EPYC’s selective power limits. It turns out that the TDP ratings that AMD’s given us are really just suggestions. Based on the official TDP of SKUs customers will be able to configure the SKU at boot time to draw either 20 Watts more power on the high-end or 25 Watts less power on the low-end. The only TDP band where this doesn’t apply is the entry-level 120 Watt chips, which can only be configured to draw up to 15 fewer Watts than their rated TDP. Configurable power envelopes on AMD’s EPYC are at the same time pretty cool and a shade confusing in that rated TDPs have become even less useful than they were before.

Finally we have AMD’s answer to the specter of EPYC’s massive I/O eating up the chip’s power budget. AMD calls it dynamic link width modulation and that’s actually a pretty good description. Essentially this is a feature that asks if you really need all those PCI-E lanes to be active, and if the answer is no, then it powers down the lanes that aren’t being actively used for something. This is a smart way to address idle or lightly loaded scenarios where all that I/O capacity would likely just be burning power rather than doing useful work.
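
AMD didn’t detail how the feature decides which lanes to switch off, so the following is just one plausible policy, sketched to make the idea concrete. The width steps, the 1 GB/s per lane figure, the 25 percent headroom factor, and the `lanes_needed` function are all assumptions of ours, not AMD’s implementation.

```python
# Link widths the hypothetical policy can choose from.
WIDTHS = (1, 2, 4, 8, 16)


def lanes_needed(demand_gbs, per_lane_gbs=1.0, headroom=1.25):
    """Return the narrowest width that covers current demand plus headroom.

    Returns 0 when there is no traffic, meaning the link can be powered
    down, and the widest setting when demand exceeds what the link offers.
    """
    if demand_gbs <= 0:
        return 0
    target = demand_gbs * headroom
    for width in WIDTHS:
        if width * per_lane_gbs >= target:
            return width
    return WIDTHS[-1]


for demand in (0.0, 0.5, 3.0, 100.0):
    print(f"{demand} GB/s of traffic -> x{lanes_needed(demand)}")
```

The headroom factor is there so a link doesn’t thrash between widths when traffic hovers near a boundary; a real implementation would also have to account for the latency of waking lanes back up.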

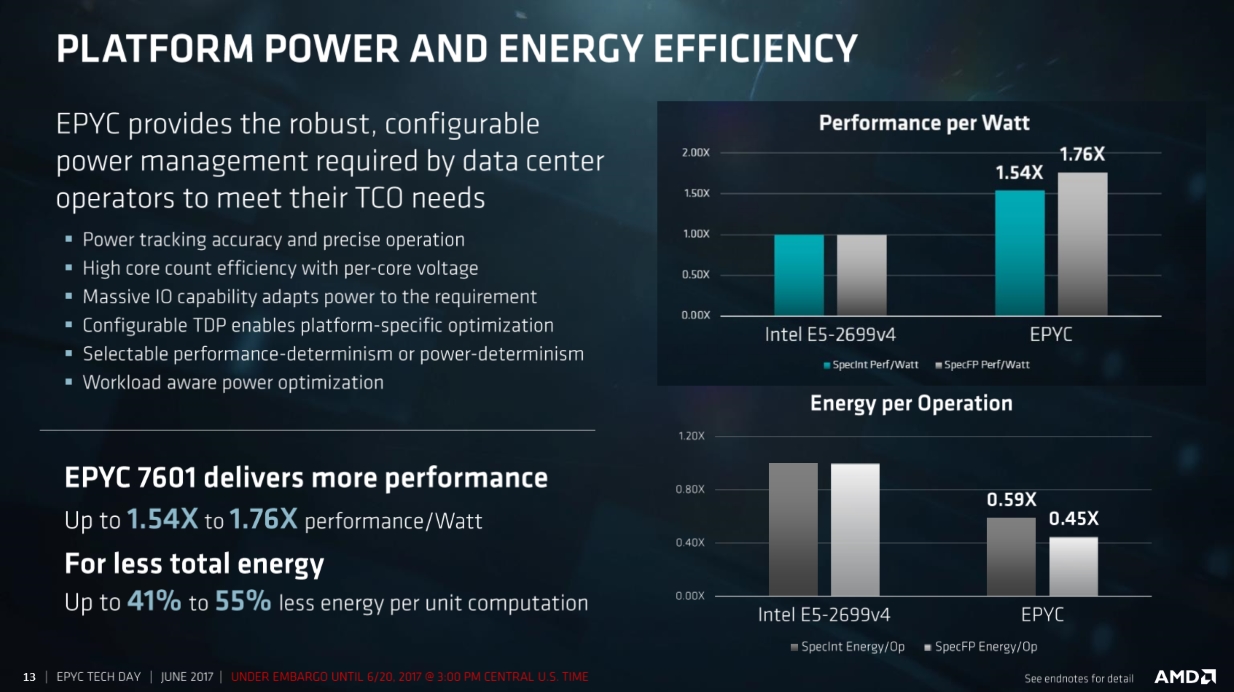

The outcome of all these different power management capabilities and tactics is an EPYC chip that AMD believes can offer between 50 percent and 75 percent better performance per watt than similarly performing parts from Intel. This is a pretty bold claim from AMD and hopefully third-party testing in the coming months will be able to validate it.

One thing AMD did make very clear to us during their presentations is that EPYC wasn’t designed to compete against Intel’s current crop of Xeon E5 products. Rather it was designed to compete against what Intel will be bringing to market next. If these performance per watt numbers are to be believed then Intel’s certainly going to need something new to reclaim the performance per watt crown.

In any case the power efficiency technologies inside of AMD’s EPYC CPUs are laudable. AMD still has a big mountain to summit in terms of market share but with EPYC they, at the very least, have the right climbing gear. S|A

Thomas Ryan
