One of the most awaited Silicon Valley startups, Soft Machines, just broke cover with its VISC architecture. Let's take a look at this method of virtualizing CPU resources for higher IPC.

Soft Machines is attacking a problem that should be familiar to most geeks: the power/performance wall. This problem is also known as the death of CPU scaling, and it explains why CPU cores have gone from 100% scaling per generation to the meager 10% or so they get now. To get more performance, the complexity of current out-of-order (OoO) machines increases far more than linearly. Complexity roughly equals power use, so a device ends up burning a multiple of the power of its predecessors for a fractional IPC gain.

Soft Machines is attacking this problem in a novel way with an architecture they call VISC; the V stands for Virtual/Virtualized. The idea is to have virtual cores that apportion resources dynamically based on the current workload, and re-apportion things on the fly as that load changes. This will allow the hardware to effectively be tailored for the software rather than the other way around. Everything starts at the Global Front End (GFE).

VISC stack from a high level view

The GFE does what a normal CPU’s front end does, but the end result is different. Instead of shuttling ops to the respective execution units/pipes, the GFE makes ‘threads’. We put that word in quotes because these are not the kind of threads you are thinking of in an OS sense; they are invisible to the software running above them. Threads in this case are independent, but not necessarily completely self-contained, chunks of instructions that normally number less than 100 or so. What they contain and how long they are really depends on the workload itself and decisions made by the GFE.

These threads are then dynamically allocated to hardware resources based on the needs of the thread; the hardware a thread ends up with is a virtual core. A ‘heavy’ thread gets more of the appropriate resources and a light thread gets less, but in theory both should get what they need and will use. You can see the power and performance benefits here: the optimal case is where the hardware is perfectly aligned with the software and nothing is unused.

Soft Machines puts much of that allocation work up front in the GFE to make sure the rest of the core is utilized more fully. This isn’t to say that their core is perfect and works magic; there undoubtedly can be conflicts which don’t allow the GFE to keep everything running at 100%. As a side note, the load balancing scheme is said to be ‘perfect’ because a thread can be allocated anywhere between 0% and 100% of the resources of the two cores assigned to it; at least in this architecture there are no weird constraints.
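To make the 0-100% idea a bit more concrete, here is a toy Python sketch that splits a shared pool of execution slots between ‘threads’ in proportion to what each could use. Everything in it is an assumption for illustration: the `apportion` function, the slot counts, and the demand numbers are made up, and Soft Machines has not disclosed how the GFE actually makes these decisions.

```python
from fractions import Fraction


def apportion(demands, pool_slots):
    """Split pool_slots among threads in proportion to their demand.

    demands: dict of hypothetical thread name -> slots it could use.
    Returns a dict of thread name -> slots granted.
    This is an illustrative policy, not the VISC allocation scheme.
    """
    total = sum(demands.values())
    if total <= pool_slots:
        # Pool is not oversubscribed: everyone gets what they asked for.
        return dict(demands)

    # Oversubscribed: scale every demand by pool_slots / total, using
    # exact fractions and largest-remainder rounding so the grants are
    # whole slots that sum to exactly pool_slots.
    shares = {name: Fraction(d * pool_slots, total) for name, d in demands.items()}
    grants = {name: int(share) for name, share in shares.items()}  # round down
    leftover = pool_slots - sum(grants.values())
    by_remainder = sorted(shares, key=lambda n: shares[n] - grants[n], reverse=True)
    for name in by_remainder[:leftover]:
        grants[name] += 1
    return grants


# A heavy and a light thread competing for 8 slots.
print(apportion({"heavy": 12, "light": 4}, 8))
# One busy thread next to an idle one can take the whole pool.
print(apportion({"solo": 20, "idle": 0}, 8))
```

The point of the toy is only that nothing pins a thread to a fixed slice: an idle thread gets 0%, a lone busy thread can get 100%, and everything in between is allowed.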

VISC prototype device test results

The current Soft Machines prototype is a dual core device, meaning there are two virtual cores and an unstated number of underlying resources. The company has simulated four cores and they have studied up to eight virtual cores. They believe the architecture can scale to high core counts if needed to give ‘server class’ performance. The hardcore chip architects among you will probably realize that this means the GFE is not the only point of resource allocation. It doesn’t bottleneck like a crossbar or other single complex decision-making point would, nor does it appear to hit a scaling wall.

This is because the resource allocation goes far beyond mere execution units. Memory, state, memory consistency, preciseness of exceptions, ALUs, busses, and almost everything else is virtualized. VISC is not a simple case of thread 1 gets 1 INT and 2 FP pipes while thread 2 gets 3 INT and 1 FP; it goes much deeper than that. More to the point, how they do this is the magic, not what they do. It is easy to fall into the same traps as traditional OoO architectures by following the wrong allocation path.

If that is the case, how does Soft Machines avoid a scaling bottleneck? While many of those command decisions, pun intended, are made at the GFE, there are several other points in the pipeline that can dynamically allocate resources. These allocations are on a smaller scale and more focused to the task at hand, but by distributing the allocations and resource utilization, many traditional scaling problems are simply avoided. The devil is of course in the details but as you will see later, Soft Machines has a prototype device and it works so they seem to have those details worked out.

The current Soft Machines cores run their own internal ISA, but the company also has a converter layer for x86 or ARM code. This layer is not a Transmeta-style translation layer; it is more like firmware loaded at boot, with some hardware hooks, that could give the chip a ‘personality’ that mimics another ISA. If you want to run your x86 code on a Soft Machines core, it should be able to do that if you are willing to take a performance per watt hit of undisclosed magnitude. Intel can emulate ARM code for Android without breaking the bank, or battery in this case, so Soft Machines should be able to do roughly the same. In any case the VISC concept is ISA agnostic and could be ported to run any ISA natively.

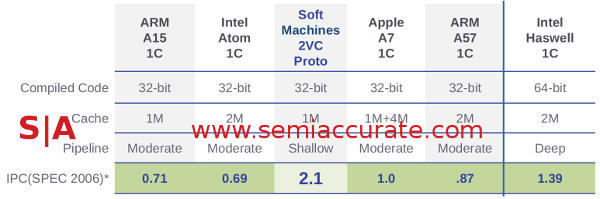

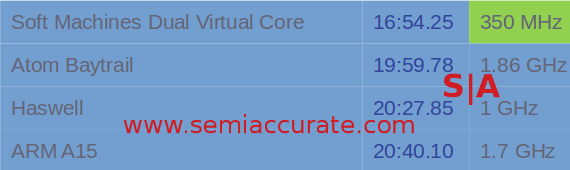

So how does it do in practice? Since Soft Machines has a prototype chip they should have numbers, right? The company gave a demo of that part running the EEMBC RGB-CMYK DENBench benchmark against a 2C A15 @ 1.7GHz (Exynos 5250), a 1C Haswell @ 1GHz (3550M), and a Bay Trail Atom @ 1.86GHz (Z3470). The Soft Machines Dual V-Core Test chip had 1MB of L2 and won the benchmark as you can see below. Test times are in minutes; lower is of course better.

That is a pretty clear win

At least on this benchmark, the Soft Machines architecture works well. The company claims it will draw between 1/4 and 1/2 the power or run at ~2x the performance of competing architectures across a variety of benchmarks. They showed a wide range of numbers on one slide including SPEC 2000, SPEC 2006, EEMBC DE, Kraken, and of course Dhrystone, all against an A15. Needless to say, they won by a large margin at the low end, and by a large multiple in a few cases.

The important thing to note is that the Soft Machines core has a much higher IPC than the others, and by much we mean a multiple. If you look at the test results above, the slowest of the competition is running at 1GHz, the VISC chip was running at a mere 350MHz and finished significantly faster. If only the Soft Machines core could run at clocks of four digits, that would be really impressive.
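To put a rough number on ‘a multiple’, per-clock performance on the same workload is just the inverse ratio of cycles spent, where cycles are run time multiplied by clock speed. The Python sketch below uses only the two clock speeds quoted above; the run times are placeholders set to a tie, which is the worst case for VISC given that it actually finished faster. The function name and arguments are illustrative, and because the chips run different ISAs this is strictly work per clock rather than instructions per clock.

```python
def relative_per_clock(time_a, mhz_a, time_b, mhz_b):
    """How many times more work per clock chip A does than chip B.

    cycles = run time * clock speed. For the same workload, the chip
    that needs fewer cycles is doing more work per cycle.
    """
    cycles_a = time_a * mhz_a
    cycles_b = time_b * mhz_b
    return cycles_b / cycles_a


# Placeholder run times: assume the 350MHz VISC chip merely TIED the
# slowest-clocked competitor at 1GHz (1000MHz).
tie = relative_per_clock(1.0, 350, 1.0, 1000)
print(f"{tie:.2f}x")  # 2.86x
```

So even a dead heat would imply nearly 3x the work per clock, and any lead in run time multiplies on top of that.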

Ain’t it pretty for a prototype device board?

Luckily for the company, it can, or at least it can run quite a bit faster than the 350MHz it was run at in the demo. Soft Machines would not comment on how high it can go, what the die size was, or the power draw, but you can make some assumptions based on the benchmark numbers above. It was built on a 28nm process and that is about it. Any good size guesses based on the board above? In addition to the core count scaling, if the clocks can climb above 1GHz, Soft Machines’ claims of ‘server class’ performance seem quite reasonable. That said, performance tends not to scale linearly with frequency, nor does power, so where things end up is still an open question.
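One simple way to see why clocks alone don’t tell the story is a textbook-style model, not anything Soft Machines disclosed: split run time into a part that shrinks as the clock rises (core cycles) and a part that does not (waiting on memory). The function names, the 1050MHz target, and the 25% memory-bound split in this Python sketch are all made-up illustration values.

```python
def projected_time(base_time, base_mhz, new_mhz, memory_bound_fraction):
    """Estimate run time at a new clock under a simple two-part model.

    The core-bound part scales with clock speed; the memory-bound part
    is assumed to stay constant. Both assumptions are simplifications.
    """
    core_part = base_time * (1 - memory_bound_fraction) * base_mhz / new_mhz
    stall_part = base_time * memory_bound_fraction
    return core_part + stall_part


def speedup(base_mhz, new_mhz, memory_bound_fraction):
    """Speedup over the base clock under the same model."""
    return 1.0 / projected_time(1.0, base_mhz, new_mhz, memory_bound_fraction)


# Hypothetical: triple the demo clock (350MHz -> 1050MHz) on a workload
# that spends a quarter of its time waiting on memory.
print(f"{speedup(350, 1050, 0.25):.2f}x")  # 2.00x
```

Under those invented numbers, 3x the clock buys only 2x the performance, which is the kind of gap the caveat above is pointing at.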

What is Soft Machines going to actually make? They are aiming to license cores and technology, not to make SoCs themselves. They will either make a core for license or allow you to roll your own with their architecture, depending on your needs. If they provide the x86 and/or ARM software translation layers, VISC chips could be a very attractive way to go for many mobile SoC makers. More interestingly, this does not necessarily compete with ARM or AMD/Intel directly; it could work with them too if the customer does not want to use the Soft Machines ISA. Given the respective ARM, x86, and native Soft Machines software bases, the translation layer is going to be vital in getting VISC off the ground. Then again, this is a topic for IP and licensing geeks, one of which I am not.

So Soft Machines has a new architectural paradigm to dynamically allocate CPU/core resources based on the needs of a software ‘thread’. It can hand out those resources to threads with ‘perfect’ granularity, i.e. from 0-100%, and does so in multiple places along the pipeline. The cores are almost completely virtualized, not simply the ALUs. This avoids many traditional scaling problems and looks to miss the painful parts of the perf/watt headaches too. In short, it looks very good at the current time. Based on what was said, VISC should hold up at higher clocks and core counts too. Keep an eye on Soft Machines, they appear to be on to something very important. S|A

Charlie Demerjian
