SemiAccurate has been down on Intel’s Xpoint memory and the official briefing changed very little of that. In fact it really only codified our earlier claims that the memory is so far from Intel’s initial claims that it might as well be a different product.

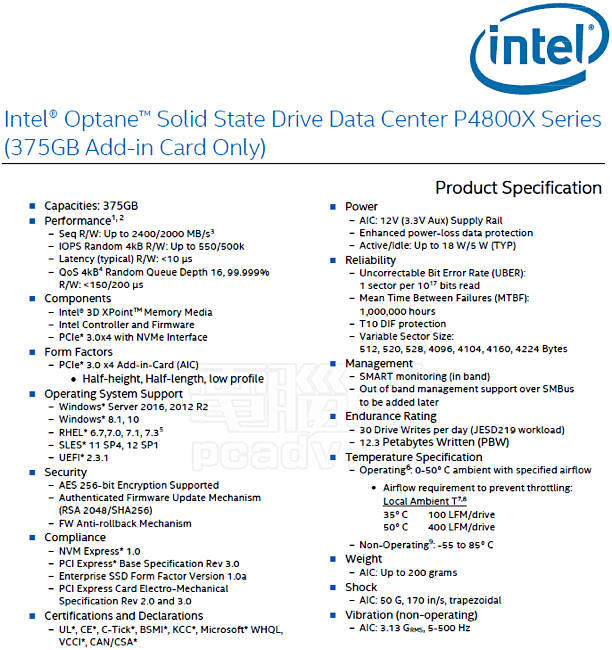

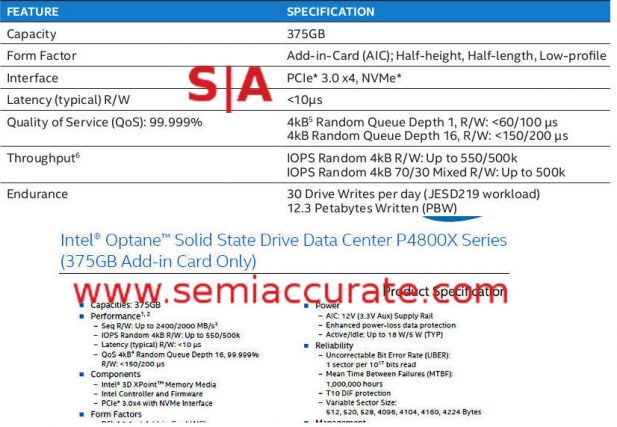

Today we finally have official word on the first Xpoint product. Intel wants to call it Optane, but that name is too dumb for us to repeat more than once. That product is the DC P4800X Xpoint PCIe SSD we told you about last week. The specs are exactly as we said they would be, minus a few data points not released in the press documentation, but more on that later. The key points are that it is a 375GB PCIe SSD with some very interesting software additions. It is squarely aimed at write-heavy enterprise workloads with lots of small random reads and writes. This is the one area where it shines.

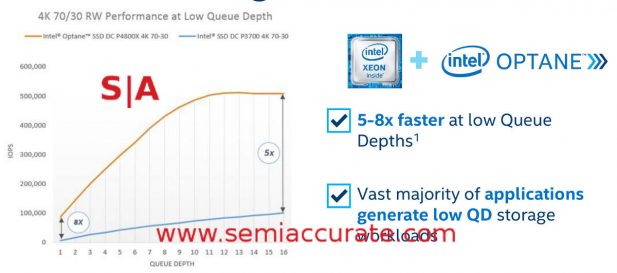

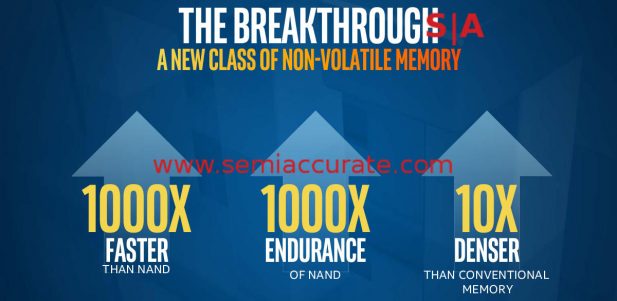

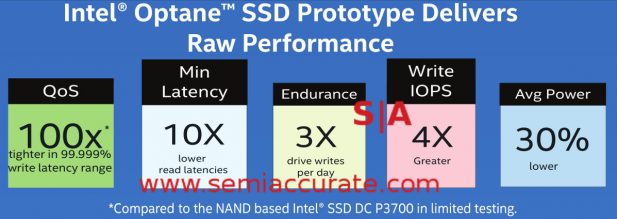

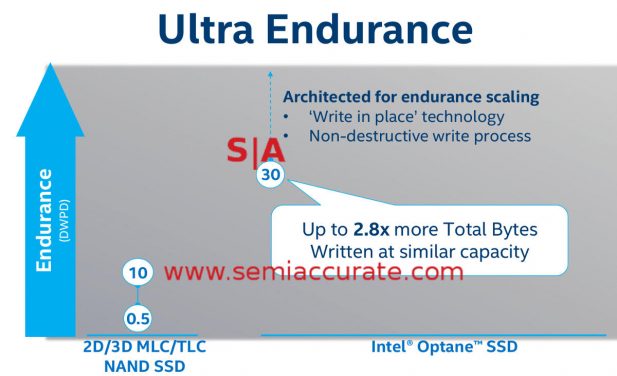

In fact, for random reads and writes it is a truly impressive product. It crushes flash-based devices in that arena, and the lower the queue depth, the better. The only real problem is that this workload is obviously write heavy, and a write-heavy workload also needs great endurance. Endurance was a promised Xpoint strength, with a claimed 1000x the endurance of flash at launch. That 2015 claim was reduced to 3x in 2016. Today it is officially 2.8x, something we consider close enough to be attainable, unless you actually look at the numbers on the spec sheet. Since that doesn’t fall under the good news section, we will just say more on that later. Think happy.

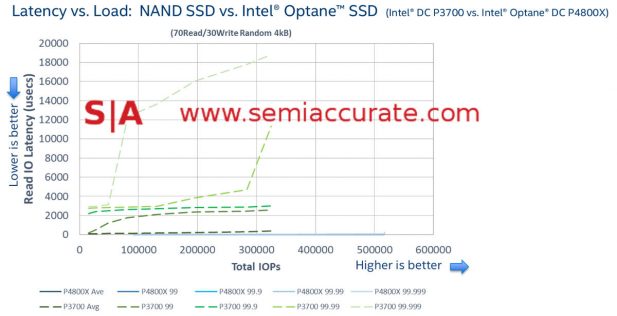

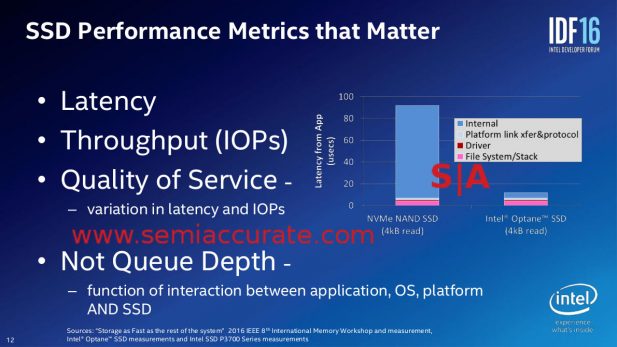

So back to the bright spots, random reads and writes. Xpoint is much more akin to a byte-addressable storage medium like RAM than a block-addressable medium like NAND, and this is a very good thing. It allows an Xpoint drive like the P4800X to absolutely crush any flash-based rival in latency tests, a margin which only grows under high IOPS. If you look at the graph below, the Xpoint drives win by abusive margins on this very important test.

IOPS and latency for flash and Xpoint

Things look even better for Xpoint when you look at queue depths. Flash performs far better as queue depths climb because deeper queues let it hide latency. This is partly because of the structure of NAND chips and partly because of how flash SSD controllers have evolved in parallel to deal with that structure. Vastly simplified, a controller can send out one request on clock 1, a second on clock 2, and so on. Instead of waiting for the response from dog-slow flash cells, it keeps issuing requests, and when the first request returns, it services it while the other outstanding requests are still in flight. GPUs and many other parallel systems hide latency in similar ways.

Low queue depths shine

Because Xpoint has a very different architecture that is unquestionably better for random reads and writes, it can service requests almost instantly. (Note: This isn’t to say that flash is better or worse; it is just aimed at a different cost/density/speed tradeoff point.) As you can see from the slide above, by the time Xpoint gets to a queue depth of 16, it can saturate the bus. Flash doesn’t even get going until the queue depth is much higher. Intel quite fairly doesn’t list those higher queue depths on the P4800X datasheets because there is no point to it. For low-latency, QoS-controlled, predictability-focused environments where responsiveness is important, Xpoint is the best technology on paper.
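The latency-hiding argument above is essentially Little’s law: the I/O rate a device sustains equals the number of requests in flight divided by the time each one takes, up to whatever ceiling the device hits. The sketch below is a simplified model under stated assumptions, not a description of how either controller works. The IOPS ceilings are the 4K random read figures quoted later in this article, 10μs is the P4800X’s “<10μs” latency figure used as an upper bound, and the 90μs flash latency is a hypothetical round number chosen only for illustration, not a P3700 spec.

```python
import math


def iops_from_queue_depth(queue_depth: int, latency_us: float, max_iops: float) -> float:
    """Little's law (IOPS = requests in flight / per-request latency), capped at the device ceiling."""
    return min(queue_depth * 1_000_000 / latency_us, max_iops)


def queue_depth_to_saturate(latency_us: float, max_iops: float) -> int:
    """Smallest queue depth at which Little's law reaches the device's IOPS ceiling."""
    return math.ceil(max_iops * latency_us / 1_000_000)


# Assumed inputs: (latency in microseconds, IOPS ceiling).
# The NAND latency is HYPOTHETICAL and only illustrates the shape of the curve.
devices = {
    "P4800X (Xpoint)": (10.0, 550_000),
    "NAND SSD (hypothetical latency)": (90.0, 460_000),
}

for name, (latency_us, max_iops) in devices.items():
    curve = [round(iops_from_queue_depth(qd, latency_us, max_iops)) for qd in (1, 4, 16, 64)]
    print(f"{name}: IOPS at QD 1/4/16/64 = {curve}, "
          f"saturates at QD {queue_depth_to_saturate(latency_us, max_iops)}")
```

Real drives do not follow this idealized curve, which is why the model saturates earlier than the QD16 on Intel’s slide. The point is only that a lower per-request latency lets a device reach its ceiling with far fewer requests outstanding.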

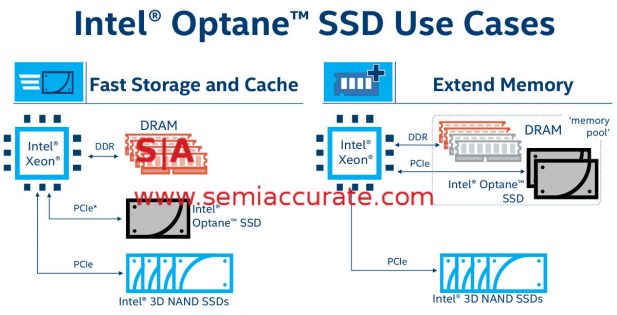

Xpoint use cases

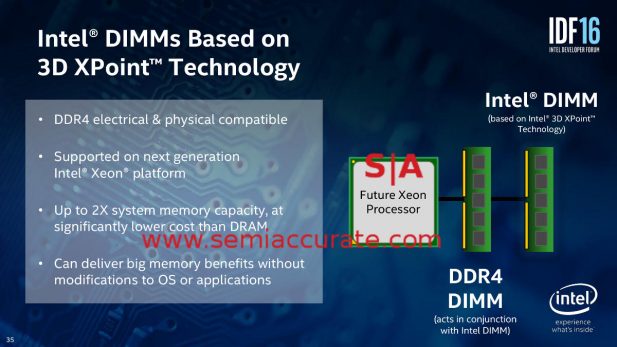

There are a few neat tricks that Intel is adding to the software bag, with two main use cases. The first is fast cache and storage; the second is extending memory. As a fast cache the Xpoint memory is a good idea, again if the endurance were there, but it is hobbled by the form factor. You might recall that when Intel first launched and talked about Xpoint, two form factors were mentioned, 2.5″ SSDs and DIMMs, as you can see below. If you are familiar with Intel code names, the DIMMs were Apache Pass.

Apache Pass from IDF 2016

Xpoint is unquestionably better than flash at this workload because of its much more granular addressing; this alone would be a clean kill on some workloads. In a DIMM form factor, coupled to the low latency, high speed, and width of the memory bus, Xpoint is almost perfect. Unfortunately, as SemiAccurate brought you exclusively last year, the latency of Xpoint isn’t there. Apache Pass was summarily pulled from the roadmaps long ago with no official explanation, leaving only the SSD form factor. Today we know that as the P4800X.

Xpoint and SSD latencies

As you can see from the IDF 2016 slide above, the platform it sits on roughly doubles the inherent latency of the chips. In a DIMM form factor it would be at least twice as fast, a major advantage for “fast cache” workloads. That said, Xpoint is still better than flash in this scenario. One caveat is that for the moment Xpoint is limited to 375GB, with 750GB and 1500GB versions coming in Q2 and 2H 2017 respectively. If you can live with a smaller, faster cache, Xpoint is a much better fit for this workload than flash.

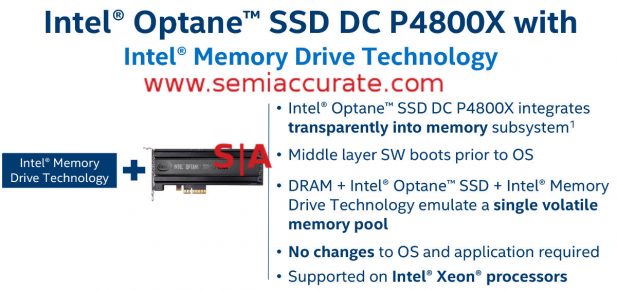

Intel Memory Drive Technology

The second workload, extending memory, is a more questionable fit. If Apache Pass hadn’t been pulled from Purley, as we exclusively told you earlier, this would be the killer app for Xpoint; in fact it was the very reason we were told it was developed. Remember when SemiAccurate told you about the DRAM-free PC in 2012? Any guesses as to how that demo was meant to be productized? It will be, just later on.

Back to the P4800X story: Intel has a software layer called “Memory Drive Technology” which effectively maps an Xpoint SSD transparently as memory. Although no details were given as to how this works, our moles tell us it is basically mapped in the DDIO unit. This is very smart and effective, but again, the driver stack, SSD controller latency, and PCIe bus traversals take it out of contention as a memory-class device.

This is what Apache Pass was meant for; putting it in an SSD form factor does away with 99% of the advantages. That said, for certain very specific workloads it is a great fit, but the general use case isn’t there because the endurance isn’t even close to the promised number. One very interesting point is that the memory extension use case eats up 55GB of drive capacity, leaving only 320GB for memory extension. It also has an unspecified price tag for the moment; make of this what you will.

Intel didn’t specify what the capacity is used for, but it is almost assuredly massive overprovisioning for endurance; Intel directly stated that overprovisioning for performance is not useful on Xpoint. As it stands now, the cells do not have the endurance to survive such a write-heavy use case without those extra sectors. Knowing how a Xeon routes requests to memory or Xpoint at a low level, SemiAccurate can say with assurance that the capacity is not used to store code or firmware. More on how this works later.

Intel provided several use cases where the P4800X, and Xpoint in general, does shine. Unfortunately, those and many other data-containing slides in the presentation given to journalists were absent from the official slides given to us days later. Even with this ‘curious’ omission, we think there are a few narrow niches where the downgraded, Xpoint-bearing P4800X and its successors can shine if you are comfortable with the endurance specs.

This is where things go from happy and fluffy new product introductions to actual results and what Intel is willing to claim in writing. As you know, SemiAccurate has been rather negative about Xpoint of late, a near-complete reversal of our original, incredibly upbeat stance. This change was due to two factors: Intel’s stunning downgrade of Xpoint’s specs and how they messaged that change. In our first look at the data last year we pointed out, using only Intel’s own data and slides, how Xpoint had seen a 333x reduction in endurance (1000x divided by 3x). No, we are not joking, that is three hundred and thirty-three times less. Take a look at the slides below from the Xpoint introduction in 2015, IDF 2016, and today, in that order.

2015, 2016, and 2017 for comparison, all Intel slides

For the pedantic, technically 3x and 2.8x are less than 1000x, so legally speaking Intel is in the clear. Officially the claims are not inconsistent because one is for chips and the other is for drives. If you understand how wear leveling, spare sectors, and the rest work, overprovisioning should not have an effect on this ratio unless Intel intentionally hobbled Xpoint’s endurance-enhancing mechanisms. In a device meant for high-write workloads, SemiAccurate highly doubts that Intel would do this, and again, more on this later.

So what is the problem? Take a look at the following three slides. The first is from the data sheet for the DC P3700 SSD, the drive Intel compares the P4800X to in their slides. It can be found here. The second is the same for the P4800X, found on the Chinese site Techbang. (Note: The slide in question is watermarked PCadvisor but we can’t find the original.) The third is what Intel provided the press for specs.

Three sets of Intel numbers

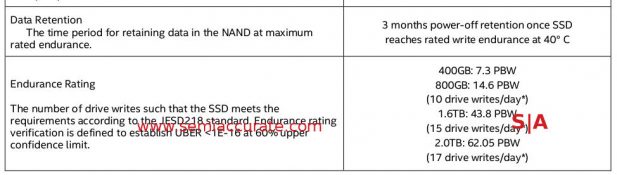

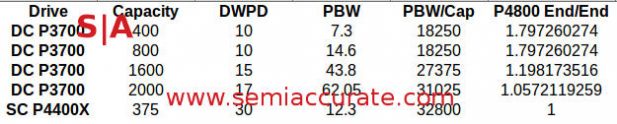

The relevant data point is endurance, something SemiAccurate has been keenly aware of for quite a while now. Since the P3700 series drives are the ones Intel compares the P4800X to in their presentation, it is a fair comparison point to use for endurance claims. If you look at page 16 of the linked data sheet, you will see the following endurance table which requires a bit of explanation.

Intel P3700 endurance from datasheet

When SemiAccurate asked Intel about overprovisioning on the P4800X, we got a response that explained the use of overprovisioning for performance reasons, and quite fairly claimed that the Xpoint drives don’t really need this because of their internal structure, mainly the write-in-place architecture. This is quite accurate but sidesteps the question of overprovisioning for endurance. Intel did give a much lengthier explanation to SemiAccurate about overprovisioning which is reprinted at the bottom of this story, it is worth the read if you are into the technical details.

Back to the flash-based P3700: there are four variants of the drive, 400/800/1600/2000GB, and each has a different endurance rating, as you can see above. The reason for this is that flash cells aren’t uniform in performance, endurance, or nearly any quantifiable metric. Flash chips are usually binned with large margins to account for this; think worst-case cell plus a margin. For cheap consumer products this margin is small if it is there at all, while enterprise drives have substantial margins. Both of these are reflected in the price and warranty.

For endurance there are on-die spare cells, mostly for repair, and spare sectors on the drive itself that the controller uses to map out and remap dead blocks. In wear leveling and performance-enhancing mechanisms, these spare blocks can also be used to speed up writes and to delay or batch garbage collection. This is what Intel thought SemiAccurate was referring to by overprovisioning, a fair interpretation.

Since flash, and most semiconductors for that matter, has failure rates that map to a classic ‘bathtub’ curve, the cells that die first because of low endurance are usually few in number. As write lifetimes grow, the rate at which cells die also climbs until it hits the peak, and by then a drive is long bricked. Spare sectors are used to replace bad sectors, i.e. sectors with a dead cell that the on-die spare cells couldn’t replace transparently.

Because most modern drives also use this spare capacity to enhance performance, this mechanism explains two things. First, SSDs tend to lose performance as they age, partly because worn cells take longer to write and partly because the spare sectors are no longer available for performance-enhancing mechanisms. This is normal behavior. Second, SSDs tend to be fine for a long time and then suddenly throw warnings in rapid succession before bricking. This is due to spare sectors being mapped out with increasing frequency as you climb the bathtub curve. Again, normal behavior.

Most flash drives are overprovisioned, in the endurance sense of the term, to one degree or another. Low-end SSDs tend to be sold in ‘nice’ computer numbers like 512GB, exactly what you would expect from a multiple of 16 or 32GB flash die. Somewhat counterintuitively, higher-performance and higher-endurance consumer drives tend to have capacities that are not ‘nice’, like 500GB or 480GB. Enterprise drives go one step further and are even smaller, at 400GB or so. This is overprovisioning and spare sectors in action.

The Intel SSD 750, for example, has a 400GB capacity using 16 flash channels with two 16GB/128Gb flash stacks per channel. That means this Intel high-performance drive has 512GB on the PCB, with 112GB used for overprovisioning, performance and spare sectors combined. SemiAccurate considers this a very good thing; the drive should be both silly fast and have more than enough endurance for consumer uses. It is and it does.

That same PCB and controller, when sold as an Intel DC P3700, has all 18 channels filled but keeps the same 400GB capacity. Doing the math shows there is 576GB of flash on board, or 176GB for overprovisioning. According to page 11 of its data sheet, the smallest SSD 750 is rated for 70GB written per day, or 0.175 DWPD (Drive Writes Per Day), for 5 years. The DC P3700 with the extra flash is rated at 10 DWPD over the same 5 years. Same board, same controller, unlikely to be the same grade of flash, and unquestionably different overprovisioning. That difference is not minor: a bit over 57x the endurance.
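The arithmetic in the two paragraphs above can be checked in a few lines. This sketch uses only the channel counts, stack sizes, and ratings quoted there; the helper names are illustrative, not anything from Intel.

```python
# Figures from the SSD 750 and DC P3700 paragraphs above.
STACK_GB = 16            # one 16GB/128Gb flash stack
STACKS_PER_CHANNEL = 2
USER_GB = 400            # both drives ship as 400GB


def raw_flash_gb(channels: int) -> int:
    """NAND on the board: channels x stacks per channel x GB per stack."""
    return channels * STACKS_PER_CHANNEL * STACK_GB


def spare_gb(channels: int, user_gb: int = USER_GB) -> int:
    """Flash the user never sees: performance reserve plus spare sectors."""
    return raw_flash_gb(channels) - user_gb


ssd750_dwpd = 70 / USER_GB   # rated 70GB written per day
p3700_dwpd = 10.0            # rated DWPD for the 400GB DC P3700
# Same capacity and the same 5-year rating, so the DWPD ratio is the endurance ratio.
endurance_ratio = p3700_dwpd / ssd750_dwpd

print(f"SSD 750:  {raw_flash_gb(16)}GB raw, {spare_gb(16)}GB spare, {ssd750_dwpd:.3f} DWPD")
print(f"DC P3700: {raw_flash_gb(18)}GB raw, {spare_gb(18)}GB spare, {p3700_dwpd:.0f} DWPD")
print(f"P3700 endurance advantage: {endurance_ratio:.1f}x")
```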

Going back to the P3700 data sheet, you will see that the DWPD numbers go from 10 on the 400/800GB versions to 15 on the 1600GB and 17 on the 2000GB drive. This is the long way of saying overprovisioning for endurance is not linear, not easy to quantify, and goes far deeper than we have time or space to cover here. That said, it is vital, but we can’t compare exactly because the SSD 750 and the P3700 almost assuredly use different flash die, or at least lower bins for the consumer model.

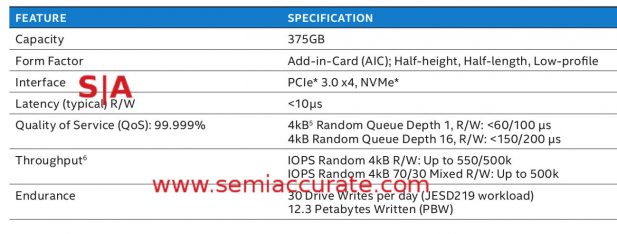

After a few pages of flash and the P3700, you would be forgiven for forgetting this article was about the P4800X, Xpoint, and endurance. Going back to the table above, ignore DWPD because it is a somewhat irrelevant derived number, simply endurance / capacity / (rated lifespan in years × 365). Endurance in PBW (petabytes written) is the only thing that really matters when you get down to it.

The P3700 data sheet lists endurance as 7.3/14.6/43.8/62.05PBW for the 400/800/1600/2000GB drives. Note how it is not linear with respect to capacity. Dividing by capacity gets you the endurance per unit, be that GB, cell, or whatever. The official spec for the 375GB P4800X is 12.3PBW and 30DWPD. That is an incredible number, right? Look at the DWPD figure provided above.
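The DWPD definition above can be applied directly to the quoted ratings as a sanity check. This is a minimal sketch assuming decimal units (1PB = 1,000,000GB) and the 5-year P3700 rating stated earlier. The article does not state a lifespan for the P4800X, so the second function solves the same formula for it; the roughly three years it returns is a derived number, not an Intel spec.

```python
GB_PER_PB = 1_000_000  # decimal units, as drive makers quote them
DAYS_PER_YEAR = 365


def dwpd(endurance_pbw: float, capacity_gb: float, years: float) -> float:
    """Drive writes per day = endurance / capacity / (rated lifespan in years x 365)."""
    return endurance_pbw * GB_PER_PB / capacity_gb / (years * DAYS_PER_YEAR)


def implied_years(endurance_pbw: float, capacity_gb: float, rated_dwpd: float) -> float:
    """The same formula solved for the rated lifespan."""
    return endurance_pbw * GB_PER_PB / capacity_gb / rated_dwpd / DAYS_PER_YEAR


# P3700 ratings from its data sheet, all over 5 years: capacity in GB -> PBW.
p3700 = {400: 7.3, 800: 14.6, 1600: 43.8, 2000: 62.05}
for capacity_gb, pbw in p3700.items():
    print(f"DC P3700 {capacity_gb}GB: {dwpd(pbw, capacity_gb, 5):.1f} DWPD")

# The P4800X is quoted as 12.3PBW and 30DWPD with no lifespan; derive the implied one.
print(f"P4800X 375GB: about {implied_years(12.3, 375, 30):.1f} years implied")
```

The recomputed 10/10/15/17 DWPD match the data sheet, which is a decent sign the PBW figures and the formula are being read the same way.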

This looks really impressive, 2.8x the DWPD of flash. The slide also directly compares it to the 800GB DC P3700, which has the same DWPD rating as the 400GB model but not the same as the 1.6 or 2.0TB versions, which are higher. The table below lists the drives, capacities, DWPD, rated endurance, and endurance/capacity. Notice anything odd? (Note: The last column is the endurance per GB of the P4800X divided by the endurance per GB of the drive on that row.)

Endurance by the numbers

According to the numbers from Intel’s own spec sheet (Note: The P4800X spec sheet is the leaked one, which SemiAccurate believes to be real and accurate. SemiAccurate asked Intel for the P4800X data sheet but they did not provide it. As of this writing, hours after the embargo lift, the data sheet is not available on Intel’s site, in Ark, or anywhere else we can find.), the P4800X has far less than 2x the endurance of the two smallest P3700s, less than 1.8x. As you go up in capacity the numbers get even worse for the P4800X; it only has 1.06x the endurance of the 2TB P3700. And yes, 1.06x is still less than 1000x.
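The “Endurance by the numbers” table can be reproduced from the PBW and capacity figures quoted above. This sketch assumes decimal units and normalizes each rating to full-drive writes (endurance divided by capacity), which is the per-GB comparison the table’s last column makes. The final loop shows how far each advertised multiplier sits above the best-case ratio.

```python
# Rated endurance as quoted in this article: (drive, capacity in GB, endurance in PBW).
P3700_DRIVES = [
    ("DC P3700", 400, 7.3),
    ("DC P3700", 800, 14.6),
    ("DC P3700", 1600, 43.8),
    ("DC P3700", 2000, 62.05),
]
P4800X_GB, P4800X_PBW = 375, 12.3
GB_PER_PB = 1_000_000  # decimal units


def full_drive_writes(pbw: float, capacity_gb: float) -> float:
    """Endurance divided by capacity: how many times the rating lets you fill the drive."""
    return pbw * GB_PER_PB / capacity_gb


p4800x_writes = full_drive_writes(P4800X_PBW, P4800X_GB)
ratios = []
print(f"{'Drive':<10}{'GB':>6}{'PBW':>7}{'full writes':>13}{'P4800X ratio':>14}")
for name, gb, pbw in P3700_DRIVES:
    writes = full_drive_writes(pbw, gb)
    ratio = p4800x_writes / writes  # P4800X endurance per GB over this drive's
    ratios.append(ratio)
    print(f"{name:<10}{gb:>6}{pbw:>7}{writes:>13,.0f}{ratio:>14.3f}")
print(f"{'P4800X':<10}{P4800X_GB:>6}{P4800X_PBW:>7}{p4800x_writes:>13,.0f}{1:>14.3f}")

# How far each advertised multiplier sits above the best-case ratio.
best = max(ratios)
for claim in (1000, 3, 2.8):
    print(f"claimed {claim}x is {claim / best:.1f}x the best case of {best:.3f}x")
```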

To be fair to Intel, we expect the larger-capacity P4800X drives to raise endurance with capacity in a similar fashion to the P3700 line, so this isn’t a best-case or worst-case scenario; it is simply the only data we have available now. That said, given the numbers Intel provided, there is no way the P4800X meets even the lowly 2.8x endurance claim; it doesn’t even hit 2x. 3x is right out, and do we need to mention 1000x again?

The back of the P4800X with 14 Xpoint die

Going back to the claim of not being overprovisioned for endurance, we have a few other curiosities. The Xpoint die are 128Gb/16GB each and the P4800X drive is 375GB in capacity. Doing the math, each P4800X would need 23.4375 Xpoint die. Either this is very precise manufacturing that chops up a memory die that finely, or the drive is actually overprovisioned. Looking at the back of the PCIe card above, you can see there are 14 Xpoint die.

Intel confirmed that the controller is 7 channel with 4 die per channel, 14 die on the front and 14 on the back. 14 x 2 x 16 = 448, and 375/448 = .837, or 16.3% overprovisioning. However you look at it, and whatever you ascribe it to, there is 448GB of Xpoint memory on a P4800X. The overprovisioning percentage is a bit lower than that of the 400GB P3700, which comes out to .694, or 30.6% overprovisioning using the same math. Weigh this how you will, but it is still massively overprovisioned, on par with the consumer SSD 750.
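The die counting above reduces to a few multiplications. This sketch follows the article’s convention of expressing overprovisioning as the share of raw media not exposed to the user. Some vendors instead quote spare capacity divided by user capacity, which gives larger percentages (about 19.5% for the P4800X), but the ordering between drives is the same under either convention.

```python
DIE_GB = 16  # one 128Gb Xpoint die, per the paragraph above


def usable_fraction(user_gb: float, raw_gb: float) -> float:
    """User capacity as a share of the raw media on the board."""
    return user_gb / raw_gb


def spare_fraction(user_gb: float, raw_gb: float) -> float:
    """Raw media not exposed to the user, the convention this article uses."""
    return 1 - usable_fraction(user_gb, raw_gb)


dies_for_375gb = 375 / DIE_GB          # not a whole number of die
p4800x_raw_gb = 7 * 4 * DIE_GB         # 7 channels x 4 die per channel
p3700_raw_gb = 18 * 2 * 16             # 18 channels x 2 stacks x 16GB
ssd750_raw_gb = 16 * 2 * 16            # 16 channels x 2 stacks x 16GB

print(f"die needed for exactly 375GB: {dies_for_375gb}")
for name, user_gb, raw_gb in [
    ("P4800X 375GB", 375, p4800x_raw_gb),
    ("DC P3700 400GB", 400, p3700_raw_gb),
    ("SSD 750 400GB", 400, ssd750_raw_gb),
]:
    print(f"{name}: {user_gb}/{raw_gb}GB = {usable_fraction(user_gb, raw_gb):.3f} usable, "
          f"{spare_fraction(user_gb, raw_gb):.1%} spare")
```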

This is why SemiAccurate said Xpoint is “pretty much broken”: endurance is so low it only beats existing flash solutions by the barest of margins. You can claim differences in packaging, controllers, layouts and the rest, but on a purchasable product to purchasable product comparison, Xpoint is effectively on par with flash for endurance, 1.8x best case. Again, this is using Intel’s own numbers, some grade school math, and the exact drives Intel used for comparisons in its presentations, nothing fancy. Any guesses why Apache Pass was silently pulled from the roadmaps in mid-2016?

Then there is performance, which is a bit of a mixed bag. As we mentioned earlier, the P4800X shines in write-heavy random workloads. The queue depth differences are both immense and very favorable to the P4800X, which is why Intel promotes them heavily. Fair enough. On the usual SSD ratings, the P4800X does not fare so well.

The same P3700 data sheet lists sequential R/W performance as 2800/2000MBps, with the caveat that performance varies with capacity. This is because some capacities don’t fill all the channels and leave performance on the table. The P4800X’s sequential number was curiously left out of the press briefings and materials, but again, the leaked data sheet puts it at 2400/2000MBps. Any questions about why it was omitted? The rest of the specs line up exactly between the leaked data sheet and the press materials.

Compare and contrast

That said, you would have to be insane to buy a P4800X for highly sequential workloads; it is far and away not the best solution for this job. One number that did appear on both sheets is 4K random IOPS R/W, which is 550/500K for the P4800X and a mere 460/175K for the P3700. On writes this is a clean kill for Xpoint; on reads it is just a win. For 70/30 mixed R/W workloads the P4800X comprehensively wins, with up to 500K vs up to 265K. Latency, claimed to be “1000X faster than NAND” at the 2015 introduction, is listed at <10μs for the P4800X vs 20/20μs R/W (typical) for the P3700. See what we mean about the controller, driver, and PCIe traversals negating the benefits of Xpoint?
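Dividing the quoted specs makes the mixed picture easier to see. This sketch uses only the figures in the two paragraphs above; it takes the P4800X’s “<10μs” latency at face value as 10μs, so its latency ratio is a rough figure rather than a measurement.

```python
# Spec figures quoted in this article: metric -> (P4800X, DC P3700).
specs = {
    "4K random read IOPS": (550_000, 460_000),
    "4K random write IOPS": (500_000, 175_000),
    "70/30 mixed IOPS": (500_000, 265_000),
    "sequential read MBps": (2400, 2800),
    "sequential write MBps": (2000, 2000),
}
for metric, (xpoint, nand) in specs.items():
    print(f"{metric}: P4800X / P3700 = {xpoint / nand:.2f}x")

# "<10us" vs "20us typical", taking the P4800X figure as 10us.
latency_gain = 20 / 10
print(f"latency advantage: about {latency_gain:.0f}x, vs 1000x claimed in 2015")
```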

That brings us to cost, something SemiAccurate has been very curious about. During IDF 2016 Intel claimed Xpoint would cost “up to 1/2 the cost of DDR”. They beat this claim by a lot: a 375GB P4800X will cost only $1520, while the cheapest 64GB DDR4 stick we could find was $899, and 128GB LR-DIMMs did not have pricing listed in most places. On the downside, a 400GB P3700 on Newegg costs only $695 for the 2.5″ version and $899 for the PCIe card. At the 2TB capacity the PCIe card is cheaper than the 2.5″ drive, $3499 vs $3612. Intel seems to be charging about 2x the going rate of write-focused enterprise flash for Xpoint, a number far lower than SemiAccurate expected. The real question is what the memory-mapping 320GB variant of the P4800X will cost; the 1/2 DDR claim gives you a ballpark.
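Normalizing the quoted prices to dollars per GB shows where the “about 2x” and “beat the 1/2 DDR claim” conclusions come from. This sketch uses only the street prices listed above (early 2017, US dollars, decimal GB); per-GB cost is a simplification, since DRAM and SSDs are not interchangeable parts.

```python
# Street prices quoted in this article: name -> (price in USD, capacity in GB).
prices = {
    "P4800X 375GB (PCIe)": (1520, 375),
    "DDR4 64GB DIMM": (899, 64),
    "DC P3700 400GB (2.5in)": (695, 400),
    "DC P3700 400GB (PCIe)": (899, 400),
    "DC P3700 2TB (PCIe)": (3499, 2000),
}
per_gb = {name: usd / gb for name, (usd, gb) in prices.items()}
xpoint = per_gb["P4800X 375GB (PCIe)"]

for name, cost in per_gb.items():
    print(f"{name}: ${cost:.2f}/GB, P4800X costs {xpoint / cost:.2f}x as much per GB")
```

Against the DDR4 stick the P4800X lands under a third of the per-GB cost, while against the P3700 variants it comes out at roughly 1.8x to 2.3x.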

So in the end, what do we have? About what SemiAccurate said in the first place. Endurance is significantly worse than even our 333x reduction claim implied, sequential performance is a little worse than flash, but for random workloads Xpoint really shines. A 2x price multiplier will likely relegate the technology to niches, a small fraction of the workloads Intel intended to sell the SSDs into. According to SemiAccurate’s moles, the Apache Pass DIMM form factor is dead on Purley/Skylake-EP and is now slated for 2018, but most sources append that with “maybe”.

In light of all this, we stand by our earlier assertion that Xpoint memory is not ready for productization because the endurance is not up to the job. If you have any doubts, ask yourself why Intel had to pull out 55GB more on the memory extender variant to keep it alive for the warranty period; this is not a technology that screams reliability. A memory workload, even one limited by the PCIe bus, will blow through the 30DWPD barrier with ease, and then think about the numbers a DIMM sees.
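A rough calculation shows why 30DWPD is easy to exceed. This sketch assumes decimal units, no write amplification, and a workload that writes continuously at the 2000MBps sequential write figure from the leaked data sheet; a real memory workload would be burstier, so treat the output as an order-of-magnitude illustration only.

```python
CAPACITY_GB = 375
RATED_DWPD = 30
SEQ_WRITE_MBPS = 2000          # leaked-datasheet sequential write figure (assumed sustained)
SECONDS_PER_DAY = 24 * 60 * 60

daily_budget_gb = CAPACITY_GB * RATED_DWPD                          # writes allowed per day
sustained_mbps = daily_budget_gb * 1000 / SECONDS_PER_DAY           # average rate within rating
minutes_to_exhaust = daily_budget_gb * 1000 / SEQ_WRITE_MBPS / 60   # at full write speed

print(f"daily write budget: {daily_budget_gb:,}GB")
print(f"average rate that stays within rating: {sustained_mbps:.0f}MBps "
      f"({sustained_mbps / SEQ_WRITE_MBPS:.1%} of max write speed)")
print(f"full-speed writes exhaust a day's budget in {minutes_to_exhaust:.0f} minutes")
```

Under these assumptions the drive stays within its rating only if it averages about 130MBps, so a workload writing at full speed uses up a day’s allowance in roughly an hour and a half.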

Similarly, Intel did not allow reviewers to test or get “hands-on time” with the P4800X, and gave them no opportunity to independently test samples before the embargo lift. This is a radical departure from past practices, something SemiAccurate can say after almost 15 years of dealing with the company. Ironically, we think this change is to their detriment: we actually think the P4800X would have met its performance claims with ease, and there is no way a few weeks would shed any light on endurance or the lack thereof.

Ease, however, does not sum up our feelings about Xpoint. When it was introduced it was meant to be a radical departure from what came before, a completely new class of memory. It was the promise of NVRAM made real. The result is nowhere near that, with hundreds of times lower performance and endurance that is likely not up to the workloads the drives bearing it are intended for. In short, unless you have no other way to service your workloads, you might want to hold off on anything Xpoint, DC P4800X or otherwise, until third-party, non-Intel-funded endurance tests are made public. S|A

The following is the Intel Xpoint overprovisioning explanation in full and unedited. It is a really good explanation of the processes involved with flash and Xpoint. The author thought paraphrasing the explanation would not make it any clearer. It is presented as given, edited only for formatting.

An Intel® Optane™ SSD is not “overprovisioned” in the same sense of a NAND SSD. The random write performance of a NAND SSD can be increased by increasing the spare capacity percentage, as this makes defragmentation more efficient. Since 3D XPoint™ memory media is a write-in-place media there is no defragmentation needed, and therefore no performance can be gained in an Intel® Optane™ SSD by increasing spare capacity. With that said, both NAND and Intel® Optane™ SSDs must store ECC and metadata to maintain low error rates throughout the life of the drive, and meet the demanding needs of enterprise customers.

Additionally, there are significant differences in the 3D XPoint™ memory media and NAND media. For example, NAND media includes significant extra capacity inside every die for sparing out blocks, as well as managing ECC code words. 3D XPoint™ memory media does not have such extra capacity. Given these media architectural elements, the resulting SSD architecture and “overprovisioning” cannot be considered in the same way one would consider “overprovisioning” or spare capacity for a NAND based SSD.

As to specifics of the Intel® Optane™ SSD DC P4800X: the controller is a 7 channel controller, and performance is best with an even die to channel loading. For the 375GB drive, we have 4 media die per channel, or 28 die total. Spare capacity beyond the user capacity is used for ECC, firmware, and ensuring reliability meets the high standards customers expect of an Intel data center class SSD.

Charlie Demerjian
