During AIS, LSI gave an interesting talk about the trend toward ever-smaller flash memory cells and the problems that trend creates. Unlike most semiconductors, where a shrink is usually a win, smaller cells mean bigger problems for flash.

You may be familiar with some of the problems with flash; the most common one is write endurance. If you think of each flash cell as a tiny capacitor, shrinking it leaves less room to hold electrons, thins the layers that keep those electrons in, and reduces the space between cells. That means each write does proportionally more damage, so write lifetimes decrease as well.

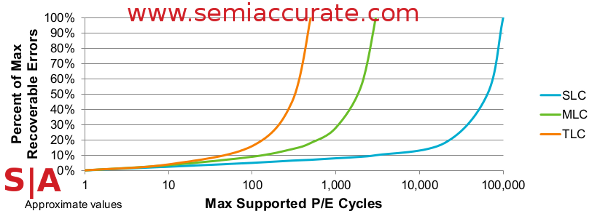

LSI says that the flash cells of 2004 held about 1000 electrons; today's hold only about 100. Worse yet, old SLC flash was usually rated at 100K write cycles, today's common MLC is rated at around 4K, and the newer TLC flash dies after 500 or so writes. All of these are what you might call a big problem.
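
To put those ratings in perspective, the Python sketch below turns rated write cycles into a rough ceiling on how much data a 128GB drive could absorb. It is a back-of-the-envelope model, not a vendor formula: it assumes perfect wear leveling and, by default, a write amplification of 1.0, both of which flatter real drives, and the function name `ideal_total_writes_tb` is made up for illustration.

```python
# Rated program/erase cycles quoted in the article.
RATED_PE_CYCLES = {"SLC": 100_000, "MLC": 4_000, "TLC": 500}

def ideal_total_writes_tb(capacity_gb: float, pe_cycles: int,
                          write_amplification: float = 1.0) -> float:
    """Rough ceiling on host data (TB) written before rated wear-out.

    Assumes perfect wear leveling, i.e. every cell is cycled equally.
    A write amplification above 1.0 means the drive writes more to flash
    than the host sent, which shrinks the ceiling proportionally.
    """
    return capacity_gb * pe_cycles / write_amplification / 1000.0

if __name__ == "__main__":
    for kind, cycles in RATED_PE_CYCLES.items():
        print(f"128GB {kind}: ~{ideal_total_writes_tb(128, cycles):,.0f}TB of writes")
```

Under those assumptions the same 128GB of flash goes from about 12,800TB of writes as SLC to 512TB as MLC and 64TB as TLC, a 200x drop from SLC to TLC.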


To make matters worse, as chip density increases, the number of I/O channels in a device goes down too. The example LSI used was a 128GB SSD: three years ago it took 32 4GB dies to build one, now it takes only 8 16GB dies. This means the number of channels that can be accessed simultaneously drops from 32 to 8 as well. While this potentially means cheaper controllers, it also means bandwidth craters, as does the ability to service many operations in parallel from a long I/O queue. The net result is that throughput plummets, and random I/O performance with it.
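
A toy Python model makes the parallelism loss concrete. The 40MB/s per-die throughput is a hypothetical placeholder, and the model assumes every die contributes the same bandwidth and handles one operation at a time, which real multi-plane dies do not strictly obey; the point is only the ratio between the two configurations. All function names here are illustrative.

```python
import math

# Hypothetical per-die throughput, chosen only to illustrate ratios.
PER_DIE_MBS = 40.0

def dies_needed(capacity_gb: int, die_gb: int) -> int:
    """Number of flash dies required to build a drive of the given capacity."""
    return math.ceil(capacity_gb / die_gb)

def aggregate_bandwidth_mbs(num_dies: int, per_die_mbs: float = PER_DIE_MBS) -> float:
    """Peak bandwidth if every die transfers at once (one channel per die assumed)."""
    return num_dies * per_die_mbs

def ops_in_flight(queue_depth: int, num_dies: int) -> int:
    """Queued operations that can be serviced concurrently, one per die."""
    return min(queue_depth, num_dies)

if __name__ == "__main__":
    for die_gb in (4, 16):
        n = dies_needed(128, die_gb)
        print(f"{die_gb}GB dies: {n} dies, "
              f"{aggregate_bandwidth_mbs(n):.0f}MB/s peak, "
              f"{ops_in_flight(32, n)} of 32 queued ops in flight")
```

Whatever per-die figure you plug in, the 8-die drive gets a quarter of the aggregate bandwidth and can have only a quarter as many operations from a deep queue in flight at once.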

If this doesn't seem bad enough, consider the number of levels per cell. Older SLC (Single-Level Cell) flash had two states, 1 or 0. If a cell held 1000 electrons, you had about 500 per level to play with, and a large margin before a reading fell into a grey area. With MLC (Multi-Level Cell, storing 2 bits), you have four states to deal with, so 250 electrons per state, and TLC (Triple-Level Cell, storing 3 bits) has 8 states, or 125 electrons per state. Unfortunately for flash makers, those 1000 electrons of yesteryear are now 100, so an MLC cell has 25 electrons per state and TLC has 12.5. Needless to say, you can't count electrons individually with any accuracy, so there has to be some margin of error, and that grey area now occupies a very large percentage of each state's range. That cuts the usable electron count notably as well; clean, unambiguous binary ones and zeros are close to a thing of the past.
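
The electron budget above is simple division, sketched here in Python. The even split is the optimistic case; the `guard_fraction` parameter is a hypothetical knob standing in for the grey area between levels, not a figure LSI gave.

```python
CELL_BITS = {"SLC": 1, "MLC": 2, "TLC": 3}

def states_per_cell(bits_per_cell: int) -> int:
    """Distinct charge levels needed to store the given number of bits."""
    return 2 ** bits_per_cell

def electrons_per_state(electrons_per_cell: float, bits_per_cell: int,
                        guard_fraction: float = 0.0) -> float:
    """Electrons available to distinguish each state.

    With guard_fraction=0.0 this is the naive even split. A nonzero
    guard_fraction (hypothetical) removes that share of each state's
    range as grey area that cannot be read reliably.
    """
    if not 0.0 <= guard_fraction < 1.0:
        raise ValueError("guard_fraction must be in [0, 1)")
    return electrons_per_cell / states_per_cell(bits_per_cell) * (1.0 - guard_fraction)

if __name__ == "__main__":
    for electrons in (1000, 100):
        for name, bits in CELL_BITS.items():
            print(f"{electrons} electrons, {name}: "
                  f"{electrons_per_state(electrons, bits):g} per state")
```

Any nonzero guard band only shrinks these numbers further, which is the article's point about the grey area eating into an already tiny budget.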

Shrinking cells also sit closer together, so they are more susceptible to interference from each other. Without going into detail, LSI lists several mechanisms: Inter-Cell Interference during programming, Read Disturb, where reading one page affects its neighbors, Leakage, and Charge Trapping/Oxide Breakdown. The names are fairly self-explanatory; all you need to realize is that each shrink makes these problems more serious. Read times go up about 4x from SLC to TLC, and page program times go up 3x too. Smaller is not faster.

That said, shrinks are not all bad; cost goes down with each one. Going from 65nm SLC to 22nm TLC gives you not just a roughly 8x decrease in cell area, but also 8 states per cell instead of 2, which works out to 3 bits instead of 1, or 3x the data per cell. That means roughly a 24x increase in capacity per mm² of silicon. Cost per mm² does go up with each smaller node, but the end result is radically cheaper storage per bit, and that is quite possibly the most important metric.
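
The capacity arithmetic can be checked the same way. This sketch assumes ideal scaling, where cell area shrinks with the square of the feature size, which real flash layouts only approximate. Note that going from 2 states to 8 quadruples the number of states but only triples the number of bits (3 bits versus 1), so the per-cell gain in data is 3x.

```python
def area_shrink(old_nm: float, new_nm: float) -> float:
    """Idealized cell-area reduction: area scales with feature size squared."""
    return (old_nm / new_nm) ** 2

def bits_per_area_gain(old_nm: float, new_nm: float,
                       old_bits: int, new_bits: int) -> float:
    """Capacity per unit of silicon area, new node relative to old node."""
    return area_shrink(old_nm, new_nm) * new_bits / old_bits

if __name__ == "__main__":
    print(f"area shrink: ~{area_shrink(65, 22):.1f}x")                 # ~8.7x
    print(f"bits per mm^2: ~{bits_per_area_gain(65, 22, 1, 3):.1f}x")  # ~26.2x
```

With the exact ratio, (65/22)² ≈ 8.7, the idealized gain comes out near 26x; rounding the shrink down to 8x gives 24x.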

To make all of this work, the flash makers have to change some things, and so do the controller makers. One of the big problems both need to address is the number of readable electrons per cell. With only tens of electrons from which to distinguish eight discrete levels, error correction is becoming an analog affair rather than a purely digital one, and the algorithms need to be much more complex as a result. This is still an ongoing debate in the industry.
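
The difference between a purely digital read and a softer, more analog one can be sketched as follows. This is an illustration under stated assumptions, not LSI's algorithm: the normalized 0-to-8 scale, the evenly spaced thresholds, and the confidence formula are all hypothetical. A hard read reports only which level bin a sensed value falls into; a soft read also reports how close the value sits to a boundary, the kind of extra information a stronger error-correction decoder can use. The Gray-code mapping is included so that a misread into a neighboring level flips only one of the three bits.

```python
import bisect

# Hypothetical normalized read thresholds between the 8 TLC levels;
# level k is nominally centered at k + 0.5 on a 0..8 scale.
THRESHOLDS = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
HALF_GAP = 0.5  # distance from a level's center to its nearest boundary

def gray_code(level: int) -> int:
    """Map a level index to a Gray code so neighboring levels differ in one bit."""
    return level ^ (level >> 1)

def hard_read(sensed: float) -> int:
    """'Digital' read: the index of the threshold bin the value falls into."""
    return bisect.bisect_right(THRESHOLDS, sensed)

def soft_read(sensed: float) -> tuple[int, float]:
    """Hard level plus a 0..1 confidence based on distance to the nearest threshold.

    0.0 means the value sits exactly on a boundary (the grey area);
    1.0 means it sits at or beyond the nominal center of its level.
    """
    level = hard_read(sensed)
    nearest = min(abs(sensed - t) for t in THRESHOLDS)
    return level, min(nearest / HALF_GAP, 1.0)

if __name__ == "__main__":
    for value in (3.5, 3.95, 4.05):
        level, conf = soft_read(value)
        print(f"sensed {value}: level {level}, bits {gray_code(level):03b}, confidence {conf:.2f}")
```

A value of 3.95 and one of 4.05 land in different levels with a hard read, yet both carry low confidence, which is exactly the case where a decoder benefits from knowing the read was marginal.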

Another problem is that error recovery areas are static. Currently, a portion of each chip, a number of whole chips, or both, are dedicated to remapping errors. When a cell goes bad, its address is permanently remapped to one of these reserved areas and life goes on. As a flash chip ages and accumulates writes, cells die at an increasing rate, and more and more of the remapping area gets used. When it runs out, the chip is no longer viable.
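
A minimal Python model of that static scheme follows, assuming remapping happens per address and that a fixed pool of spares does nothing until a failure claims one. `StaticRemapTable` and its methods are hypothetical names for illustration, not any vendor's firmware interface.

```python
class StaticRemapTable:
    """Hypothetical model of a fixed, reserved remapping area.

    Spares do no work until a failure claims one; each failed address is
    mapped to a spare permanently, and once the pool is empty the next
    failure cannot be absorbed.
    """

    def __init__(self, num_spares: int) -> None:
        self.free_spares = list(range(num_spares))
        self.remap: dict[int, int] = {}  # failed address -> spare index
        self.failed = False

    def retire(self, address: int) -> bool:
        """Remap a failed address; return False if no spare is left."""
        if address in self.remap:
            return True  # already remapped earlier
        if not self.free_spares:
            self.failed = True  # out of spares: the chip is no longer viable
            return False
        self.remap[address] = self.free_spares.pop(0)
        return True

    def resolve(self, address: int) -> tuple[str, int]:
        """Translate an address to ('main', address) or ('spare', index)."""
        if address in self.remap:
            return ("spare", self.remap[address])
        return ("main", address)

    def spares_left(self) -> int:
        return len(self.free_spares)
```

In this model the spares never contribute to wear leveling; they sit idle until `retire` hands one out, and the first failure after the pool is empty marks the device as failed.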

With hard-partitioned remapping areas, wear-leveling algorithms are somewhat limited. If those areas could be partitioned on the fly, then instead of sitting unused until they are pressed into service, they could take part in wear leveling from the first write. Letting manufacturers set their own schemes opens up a wide range of options for flash and potentially allows them to be creative. Once again, this is an area of discussion and debate among the interested parties; nothing is settled.
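
Here is a sketch of the on-the-fly alternative, where every block, spares included, sits in one pool from the start and each write goes to the least-worn healthy block. It is hypothetical and heavily simplified: writes are whole blocks, every block has the same rated cycle count, and the device counts as failed once fewer healthy blocks remain than its rated capacity needs. `DynamicSparePool` is an illustrative name only.

```python
import heapq
from typing import Optional

class DynamicSparePool:
    """Hypothetical model: extra blocks join wear leveling from the first write."""

    def __init__(self, user_blocks: int, spare_blocks: int, rated_cycles: int) -> None:
        if user_blocks <= 0 or spare_blocks < 0 or rated_cycles <= 0:
            raise ValueError("invalid pool parameters")
        self.required = user_blocks          # healthy blocks needed for rated capacity
        self.rated_cycles = rated_cycles
        # Min-heap of (erase_count, block_id): least-worn block comes out first.
        self.heap = [(0, b) for b in range(user_blocks + spare_blocks)]
        heapq.heapify(self.heap)
        self.retired: list[int] = []

    @property
    def viable(self) -> bool:
        return len(self.heap) >= self.required

    def write_block(self) -> Optional[int]:
        """Program the least-worn healthy block; return its id, or None if failed."""
        if not self.viable:
            return None
        cycles, block = heapq.heappop(self.heap)
        cycles += 1
        if cycles >= self.rated_cycles:
            self.retired.append(block)       # worn out: drops out of the pool
        else:
            heapq.heappush(self.heap, (cycles, block))
        return block
```

With 4 user blocks, 2 extras, and a 3-cycle rating, the two extra blocks take writes on the very first pass instead of waiting for a failure, and the model survives 15 block writes before dropping below the 4 healthy blocks it needs.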

There are lots of new technologies on the horizon to tackle these problems, so all is not lost. From DSP-like algorithms to partial page writes, there is no shortage of ideas for dealing with the impending problems. Things are getting much more complicated, and changes are coming ever faster. If nothing else, it is a good time to be a flash engineer, but you might lose your hair faster too. S|A

Charlie Demerjian
