Just before CES, Nvidia announced Tegra5, aka K1, in T40/A15/Logan guise, complete with their usual specious set of numbers. If you look back at their history, the patterns of misdirection are clear, as is their use of gullible press.

The claim was simple: T40/K1/Logan beats everything outright, and does it in a very power-efficient manner. To illustrate the point, Nvidia put up a slide during their press conference showing that the K1 beats the nearly nine-year-old Xbox 360 and PS3 duo, and does it using only 5% of the power. Better yet, it beats Apple’s new A7 and Qualcomm’s Snapdragon 800. Sounds great, right?

Ignoring the fact that Nvidia is comparing an unreleased chip to consoles with an HDD, an optical drive, and lots of other power-draining components, it is still an impressive hurdle to clear, right? Only as long as you don’t look back at their claims around Tegra 3, say in the first 20 seconds of this video, at which point the claims look a bit recycled. Yup, Tegra 3 was also claimed to be on par with the 360 and PS3, and it too did so using far less power. At least Nvidia came up with a new PR idea to promote K1: make a crop circle for the new chip. Doh!

Back to the story at hand: Nvidia has a long history of making performance claims not backed up by power numbers, then allowing tame press to test only one facet of the claim. The idea is to use the credibility of the site or journalist to imply that the one verified number means the rest of the company’s claims are true too. It is a classic case of phrasing a statement so that it is technically accurate but completely misleading.

The way Nvidia does it is to say that a chip can reach a performance target of X in scenario A, and then say it has a power draw of Y in scenario B. Both statements are true, but they are not true at the same time. The wording goes like, “We beat the Xbox 360 in FPS, and in this phone, we draw only 3W!” These statements are not connected, but if the press is gullible enough not to think about what is being said, Nvidia will never step in to correct an article that claims Xbox 360 performance in 3W.
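The decoupling described above can be made concrete with a short Python sketch. It is an illustration under stated assumptions only: the `Claim` type, the `fps_per_watt` helper, and every number in it are hypothetical and do not come from Nvidia. The rule it encodes is that an efficiency figure means something only when the performance and the power were measured under the same conditions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Claim:
    """One published number plus the conditions it was measured under (hypothetical type)."""
    scenario: str
    fps: Optional[float] = None
    watts: Optional[float] = None


def fps_per_watt(perf: Claim, power: Claim) -> float:
    """Combine a performance claim and a power claim into an efficiency figure.

    Refuses unless both numbers come from the same scenario, which is exactly
    the link the marketing wording leaves out.
    """
    if perf.fps is None or power.watts is None:
        raise ValueError("need an FPS figure and a power figure")
    if perf.scenario != power.scenario:
        raise ValueError(
            f"FPS measured in {perf.scenario!r} but power in {power.scenario!r}; "
            "the two numbers say nothing about each other"
        )
    return perf.fps / power.watts


# Hypothetical numbers for illustration only.
peak_fps = Claim(scenario="dev board, full clocks", fps=60.0)
phone_power = Claim(scenario="phone, throttled", watts=3.0)

try:
    fps_per_watt(peak_fps, phone_power)
except ValueError as err:
    print(err)
```

Given a matching pair, such as 60 FPS and 3W from the same hypothetical run, the helper returns 20 FPS/W; given the mismatched pair above, it refuses to produce a number at all.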

Such tricks tend to work with a site once or twice; then the site starts demanding real proof or insisting on non-rigged demos so its credibility doesn’t get hopelessly tarnished. Nvidia then moves on to the next site and quietly offers it an exclusive look at a hot new device. This works really well, and there are enough sites that won’t question an exclusive to last a few more decades.

Take a look at how this was done with the Tegra 4 launch at MWC 2013. AnandTech got an exclusive on it and had some impressive numbers to share. Nvidia would not allow Anand to measure the power used to get those peak numbers, nor was he told what they were. The conclusion was hopeful but not unusually optimistic. Only much later was it revealed that the demo was pulling over 10W, and beating the competition by large margins is not a difficult task when you use a large multiple of their power.

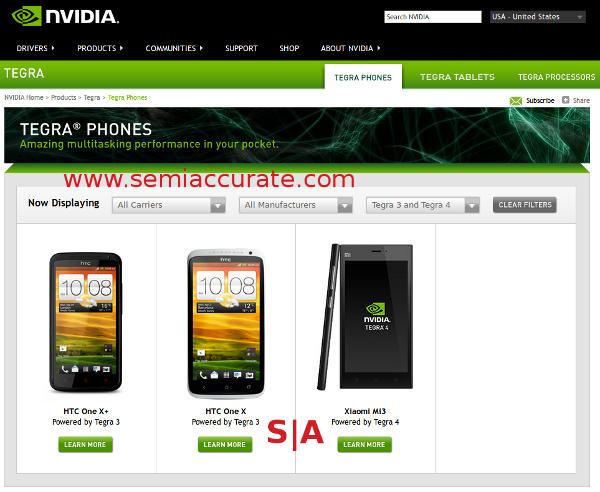

Nvidia’s complete listing for Tegra 3, 4, and 4i phones

When Tegra 4 devices were released months later, it was clear that the power use was a deal-breaker for phones. Nvidia’s own promotional pages list only one device powered by Tegra 4 ten months later, and SemiAccurate’s sources tell us it was a purchased design win. The early ‘preview’ numbers were nowhere near the end result in any shipping form factor; no mobile device could bear that much power use. In short, the demo was so skewed that the best you can say is that Nvidia was using Anand’s credibility as a proxy tool to deceive.

Stepping forward a few months, Nvidia again gave Anand a preview, this time of the upcoming Tegra 5/Logan part. Please note this was before the K1 naming scheme was trotted out at CES, but it is the same part. The numbers were impressive on the surface: it matched the performance of the iPad 4 GPU using about 40% of the power. Better yet, it could scale much higher, so it should handily beat Apple in all regards, unless you actually look at the information given and ask a few basic technical questions.

At the time of that test, Logan was unreleased, and in fact it still is. The Apple A6X was over a year old and months away from replacement; the A7 came out about a quarter later and, again according to Anand, is much faster. Better yet, the A7 hits that performance in a very thermally constrained form factor, a small phone, and it still beats the A6X running in a far less thermally constrained tablet. Since that release, Nvidia has been claiming a ~2.5x performance lead over the A7, so does anyone have any idea why TDPs aren’t listed on the slide? Must have been overlooked in the rush to CES. A 5W figure is listed on a different slide, but is it the same test rig?

Going back to the July numbers, Anand showed K1 pulling 900mW vs. the A6X’s 2.6W at the same performance level. Assuming the power rail tapping was done correctly, that is a clean kill for Nvidia, right? A claimed 5x performance lead, and then a claimed ~1/3 power use: heady stuff. Remember what we said about presenting two numbers and letting the press blur the wording?
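The arithmetic behind the July comparison is worth spelling out in a short Python sketch. The 900mW and 2.6W figures are the ones quoted above, `CLAIMED_PERF_LEAD` simply restates the ~5x claim, and the variable names are illustrative. At matched performance the K1 drew about 35% of the A6X’s power, roughly a 2.9x perf/W edge at that one operating point. Multiplying that by the separate 5x claim gives the kind of headline figure the two-number wording invites, with no measurement behind it.

```python
# Figures from the July preview as quoted above: K1 vs. A6X GPU power
# at the same performance level.
K1_WATTS = 0.9
A6X_WATTS = 2.6

# Performance was matched, so the power ratio is the whole efficiency story
# for this one operating point.
power_fraction = K1_WATTS / A6X_WATTS      # share of the A6X's power the K1 drew
perf_per_watt_gain = A6X_WATTS / K1_WATTS  # K1's perf/W advantage at that point

# The blurred reading: stack the separate ~5x performance claim on top of the
# matched-performance power saving. The 5x figure came with no power number,
# so this product has no measurement behind it.
CLAIMED_PERF_LEAD = 5.0
blurred_figure = CLAIMED_PERF_LEAD * perf_per_watt_gain

print(f"K1 drew {power_fraction:.0%} of the A6X's power")
print(f"perf/W gain at matched performance: {perf_per_watt_gain:.1f}x")
print(f"blurred headline figure (not a valid measurement): {blurred_figure:.1f}x")
```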

Beating an end-of-life part with a prototype that is a year from release is not a hard trick in the semiconductor world. Beating the new A7 with an unreleased part whose power draw is unspecified is not a trick either. Beating Apple at the same power draw would be quite a trick, but for some reason that test is not shown either, mainly because the tests that Nvidia showed were set up to be purposefully deceitful.

Think about overclocking: if you double the power a CPU or GPU uses, the performance only goes up by a little, because it is a non-linear relationship. Similarly, with underclocking you can drop the power a lot and only lose a little performance. In essence, if you halve the clocks, power drops to half or, in most cases, a lot less. In the power demo, Nvidia downclocked the K1/Logan until it hit the performance of the A6X, essentially massively underclocking it. If Anand’s claims are true, the chip in that demo should be pulling a small fraction of the GPU power it would at full clocks, again because of that non-linear relationship.
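A first-order way to see that non-linearity is the textbook CMOS dynamic-power relation, P ≈ α·C·V²·f. Across a chip’s DVFS range the supply voltage has to rise roughly in step with clock speed, so dynamic power grows close to the cube of frequency. The Python sketch below assumes exactly that linear voltage/clock relationship down to an optional voltage floor, and ignores leakage; real silicon deviates from both, and the 60% floor and every output are hypothetical, not K1 measurements.

```python
def relative_dynamic_power(clock_fraction: float, v_floor: float = 0.0) -> float:
    """Dynamic power relative to full clocks under P ~ C * V^2 * f.

    Assumes supply voltage scales linearly with clock speed but cannot drop
    below v_floor (a fraction of full-clock voltage). Leakage is ignored.
    """
    if not 0.0 < clock_fraction <= 1.0:
        raise ValueError("clock_fraction must be in (0, 1]")
    if not 0.0 <= v_floor <= 1.0:
        raise ValueError("v_floor must be in [0, 1]")
    voltage = max(clock_fraction, v_floor)
    return voltage ** 2 * clock_fraction


for clocks in (1.0, 0.75, 0.5, 0.25):
    ideal = relative_dynamic_power(clocks)
    floored = relative_dynamic_power(clocks, v_floor=0.6)  # hypothetical floor
    print(f"{clocks:.0%} clocks -> {ideal:.1%} power (ideal), {floored:.1%} (60% V floor)")
```

Under the no-floor assumption, halving clocks leaves 12.5% of the dynamic power; with the 60% floor it leaves 18%. Read in the other direction, the same cube law means doubling power buys only about 2^(1/3) ≈ 1.26x the clock, which is the overclocking side of the curve.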

If Nvidia had instead run the same demo on a K1 with shaders fused off until the remaining, fully clocked ones hit the same performance, any guesses as to how the power numbers would have looked? Any guesses as to how the economics of a Logan/K1 will work out with 5x the shader area running at 1/5th the clocks? By the time the K1 comes out, not only will the A7 be on the verge of replacement, but the less thermally constrained A7X will probably be out too. A K1 at 10+W will still trounce it, but like the Tegra 3 and Tegra 4 before it, once the part is in devices with similar thermal constraints, it falls flat because of basic physics. The Logan demos were rigged and shown to someone Nvidia knew wouldn’t question the results presented.
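The fused-off comparison asked about above can be roughed out with the same first-order model in Python. Everything here is a hypothetical stand-in rather than a K1 specification: the `GpuConfig` type, the unit counts, and the 60% voltage floor. A “wide” configuration with five shader blocks at one-fifth clock is compared with a “narrow” one that has a single block at full clock, so both deliver the same nominal throughput. Leakage and memory power are ignored.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class GpuConfig:
    units: int             # shader blocks enabled (hypothetical)
    clock_fraction: float  # clock as a fraction of full speed


def throughput(cfg: GpuConfig) -> float:
    """Nominal work per unit time, assuming perfect scaling across units."""
    return cfg.units * cfg.clock_fraction


def dynamic_power(cfg: GpuConfig, v_floor: float = 0.6) -> float:
    """Dynamic power in units of one block at full clock, P ~ units * V^2 * f.

    Voltage tracks clock linearly down to v_floor; leakage is ignored.
    """
    voltage = max(cfg.clock_fraction, v_floor)
    return cfg.units * voltage ** 2 * cfg.clock_fraction


wide = GpuConfig(units=5, clock_fraction=0.2)       # big GPU underclocked to match
narrow = GpuConfig(units=1, clock_fraction=1.0)     # same throughput, blocks fused off
wide_full = GpuConfig(units=5, clock_fraction=1.0)  # the big GPU at full clocks

assert math.isclose(throughput(wide), throughput(narrow))

for name, cfg in (
    ("wide @ 1/5 clock", wide),
    ("narrow @ full clock", narrow),
    ("wide @ full clock", wide_full),
):
    print(f"{name:20s} throughput={throughput(cfg):.1f} "
          f"power={dynamic_power(cfg):.2f} area={cfg.units}x")
```

Under these assumptions the underclocked wide part draws about a third of the narrow part’s dynamic power at equal throughput, but it spends five times the shader area to do so, and at full clocks the same silicon draws five times as much as the narrow part. A matched-performance power demo measures the first case; headline performance claims come from the last.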

Now let’s get back to K1 and CES. The numbers Nvidia presented were nothing short of amazing: they beat the Xbox 360, the PS3, and the A7! They also did so with a chip of unspecified wattage; the 5W number was a typical nebulous claim that you should be quite skeptical of. No wattages at all were given for the A7 comparison, for reasons you will understand in a bit.

Word has it that several outlets were offered exclusives on the K1 at CES under circumstances that made them ask questions. As soon as they did, the offer was withdrawn and others were contacted. SemiAccurate knows of several people who had that offer rescinded when they demanded full disclosure, and the author will pat them on the head at the next conference for their honesty.

Luckily, that didn’t stop Tom’s Hardware from getting numbers on a Lenovo ThinkVision 28 powered by a K1. It was compared against an Apple A7 in an iPhone 5S, a Qualcomm Snapdragon 800 in a Nexus 5, and an EVGA Note 7 with a Tegra 4. Please do note the size of the devices: the Apple and Qualcomm parts are both in phones, the older Tegra 4 is in a tablet with over twice the screen/device footprint, and the Tegra 5 is in a 28″ screen form factor.

Ironically, even in this large device the K1 is thermally constrained and is running nowhere near full clocks. This is likely due to the depth of the ThinkVision 28; it is hard to fit a fully clocked Tegra K1 in such a device because of the cooling constraints. How do we know? Take a look at the Nvidia K1 NDA demo setup at CES pictured below.

That is not a 5W heatsink; think more than 2x that number

The heatsink on the K1s that Nvidia was showing in private was about 2 x 2 x 0.4 inches, a bit large for a 5W part, don’t you think? In fact, it is a bit large for a 28″ AIW device, at least as far as Z-height goes; Lenovo can’t cool the 2.3GHz variant in the depth allowed. That should give you a very good idea of how much power a 2.3GHz K1 takes under load.

So can the K1 beat a pair of nine-year-old consoles? Sure, but a Tegra 3 was claimed to match the same pair too. Can the Tegra K1 handily beat an A6X and an A7? Sure. Can a K1 beat the competition, be it Apple or Qualcomm, in the same power envelope? I will leave that for you to figure out, but do note the lack of any power numbers at full clocks in any of the presentations. Also note the increasing skepticism of the message bearers over time; it goes hand in hand with the messages being delivered.

For the same reasons that the ‘overwhelming performance’ of the Tegra 4 ended up with one phone on the market a year after release, the Tegra 5 will have a similar success story. It can post big numbers at unspecified wattages, but so can the Apple A7 and the Qualcomm Snapdragon 800. The difference is that neither company needs to show those numbers; their performance in power-constrained form factors is more than adequate, not to mention both are on the market today. Nvidia, on the other hand, doesn’t give out power numbers, but may someday when the device is released. S|A

Charlie Demerjian
