AMD’s Trinity CPU is one mutt of a chip, with paradigms taken from a lot of recent CPUs. The sum of the parts all add up to a very interesting whole that transcends any one piece.

On paper, Trinity is not a very unusual part; it looks like a 4-core Bulldozer variant with 384 VLIW4 shaders. A mildly tweaked CPU with a middling core and last gen GPUs is exciting? Actually yes it is, because Trinity is about the careful use of parts that add up to a coherent whole, not an amalgam of disparate parts and duct tape. While there are no big bangs, there are a lot of little improvements coupled with the elimination of many bottlenecks, all done with an eye on efficiency. Trinity is a damn good chip.

Let’s look at Trinity, and start off with a little homework. As of now, five Trinity variants have been released, all mobile. The reviews have run from average to pretty good, depending on what is being looked at, with gaming and consumer uses faring far better than raw CPU workloads. Battery life was also a lot better than expected in sample notebooks. To sum up Trinity in sound bite fashion, it is an energy sipper that is good for games and consumer apps but loses in HPC workloads, which is about what the pre-release information telegraphed.

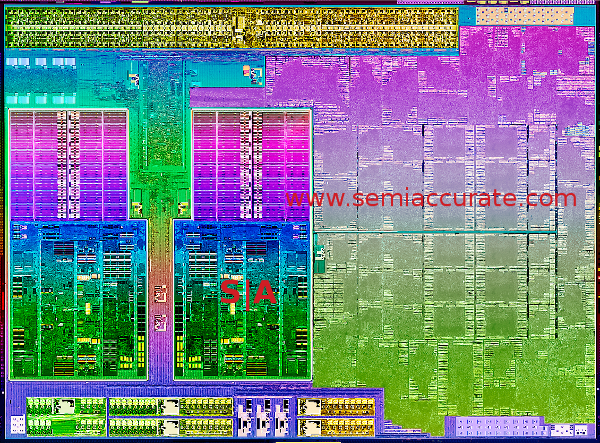

Trinity die shot

Trinity may be the spiritual successor to Llano, but it has much more in common with Bulldozer. Both of these are at least a generation behind Trinity in many critical ways. If you are not very familiar with the architectures of both chips, you might want to read up on the four Bulldozer pieces on SemiAccurate (core, uncore, problems, and more problems) and the Llano core and uncore pieces.

On paper, Trinity is just two tweaked Bulldozer modules, the Bulldozer System Request Queue (SRQ)/Unified North Bridge (UNB), the Llano CPU <-> GPU busses (Onion and Garlic), and a few other bits, all crammed into an overall Llano-esque layout. All of these pieces are massively updated, and incorporate a lot of learning since their introduction in Bulldozer and Llano. The polishing process seems to have done wonders for the overall functionality and cohesiveness of Trinity; it is more than the sum of its parts.

From Bulldozer to Piledriver:

The first question on most people’s minds is, did the Piledriver core fix the problems of Bulldozer? Short answer, yes and no. Piledriver has undoubtedly improved on Bulldozer and the rough edges are ironed out, but not everything is ‘fixed’. Intel’s Sandy Bridge and Ivy Bridge cores are not going to be threatened by anything AMD does for single threaded performance, nor will AMD challenge them on overall throughput. That is the no: AMD still loses badly to *Bridge on raw CPU performance. Then what did AMD do right?

As we suspected in the Bulldozer pieces, AMD had lots of things in the core that were not exactly what they should have been, and those were all tweaked a little here, a little there for Piledriver. Buffers were enlarged, data paths widened, and things generally flow better.

This is something that you can’t do until you have a chip in hand to test; simulation only goes so far and so fast. For each of the 10 major modifications in the core, there are dozens, perhaps hundreds, of little improvements over Bulldozer. They all add up to more than a 10% IPC improvement, and that is before frequency gains and power efficiency are taken into account.
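To get a feel for how dozens of tiny tweaks can add up to a double-digit IPC gain, here is a minimal sketch. It assumes each tweak is independent and multiplies throughput by a small factor, which real cores do not strictly obey, and the per-tweak figures are made up for illustration, not AMD numbers.

```python
import math

def combined_speedup(gains):
    """Multiply together per-tweak speedups given as fractions (0.002 = 0.2%)."""
    return math.prod(1.0 + g for g in gains)

# Hypothetical: 50 independent tweaks worth 0.2% each.
tweaks = [0.002] * 50
total = combined_speedup(tweaks)
print(f"{len(tweaks)} tweaks -> {100 * (total - 1):.1f}% overall")
```

None of the individual tweaks would show up in a benchmark on its own, which is the point the paragraph above is making.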

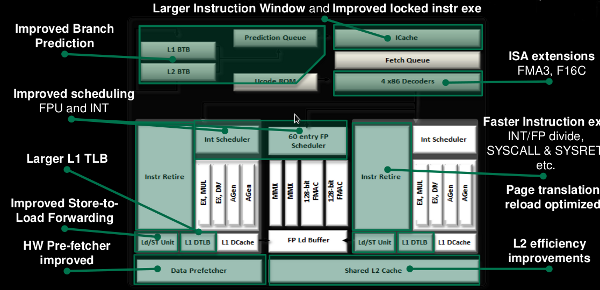

The overview of Piledriver changes

The first part of the core is one of the most important, the branch predictor. If you recall from our Bulldozer core article, that chip decoupled prediction logic from fetch logic, and used a two-stage, possibly more, prefetcher. These specifics still rate a ‘no-comment’ from AMD architects, but we don’t see any reason for this to have changed radically. It is listed as improved, not thrown out and completely redone.

The ICache shows similarly mild tweaks, with a larger instruction window, something that we strongly suspected was going to happen once Bulldozer silicon came back. These seemingly minor changes can mean the difference between execution units being fed and tens or hundreds of cycles wasted waiting for memory. Buffers are there to keep units from stalling, and coming up just a little short can be catastrophic to overall throughput.

Both of these changes can seem really minor, but a few tenths of a percent change in prediction accuracy can result in massive performance gains. The same goes for the ICache: every time you have to wait on memory, you potentially idle the CPU for a minimum of a dozen cycles. A 0.1% improvement multiplied by a 100-cycle wait is a pretty substantial gain, or at least the avoidance of a massive loss.
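A back-of-the-envelope version of that arithmetic is sketched below. The 100-cycle wait is the figure from the text; the event count per 1000 instructions and the miss rates are hypothetical values picked only to show the shape of the calculation.

```python
def stall_cycles_per_kilo_instr(events_per_kilo_instr, miss_rate, penalty_cycles):
    """Expected stall cycles per 1000 instructions from one kind of miss."""
    return events_per_kilo_instr * miss_rate * penalty_cycles

# Hypothetical workload: 200 predicted events per 1000 instructions,
# and a 0.1 percentage point improvement in the miss rate (2.0% -> 1.9%).
before = stall_cycles_per_kilo_instr(200, 0.020, 100)
after = stall_cycles_per_kilo_instr(200, 0.019, 100)
saved = before - after
print(f"stall cycles saved per 1000 instructions: {saved:.1f}")
```

Under these assumed numbers the tenth of a percent is worth a couple dozen cycles per thousand instructions, which is large next to the cycles the instructions themselves take on a wide core.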

The last of the front end changes, the ISA changes, are relatively minor. This isn’t an improvement in the sense of things executing faster, it just supports more functions. These functions, if used, can be more efficient than doing the same work via many chained instructions, or worse yet, microcode. If software supports it, Trinity will benefit, but it is very code dependent.

The Piledriver core adds AVX, AVX1.1, FMA3, AES (aka AES-NI in Intel-speak), and F16C. Bulldozer added FMA4 to the instruction set, which stands for Fused Multiply Add with 4 operands. Intel then added a far less efficient FMA3 to Haswell rather than supporting FMA4. Luckily, FMA3 in Piledriver is in addition to FMA4, not a replacement for it, and the XOP instructions are still unique to AMD. Like any new ISA, if you use them a lot, they will be a benefit, but older code doesn’t even know they are there. Whether or not this is a gain depends on the viewer.
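For readers who have not met fused multiply-add before, the sketch below illustrates two things: the single rounding that makes a fused operation different from a separate multiply and add, and the operand difference between the four-operand and three-operand forms. It is a simplified model, not real instruction semantics. The register file is a plain dictionary, the function names are made up, and the three-operand form is reduced to "the result overwrites the first source", ignoring the operand-order variants of the real encodings.

```python
from fractions import Fraction

def mul_then_add(a, b, c):
    """Separate multiply and add: the product is rounded before the add."""
    return a * b + c

def fused_mul_add(a, b, c):
    """Emulated fused multiply-add: exact arithmetic, rounded once at the end."""
    return float(Fraction(a) * Fraction(b) + Fraction(c))

def fma4(regs, dst, a, b, c):
    """Four-operand form: separate destination, all three sources survive."""
    regs[dst] = fused_mul_add(regs[a], regs[b], regs[c])

def fma3(regs, a, b, c):
    """Three-operand form (simplified): the result overwrites the first source."""
    regs[a] = fused_mul_add(regs[a], regs[b], regs[c])

# Inputs chosen so the intermediate product needs more bits than a double holds.
x, y, z = 1.0 + 2**-27, 1.0 - 2**-27, -1.0
print(mul_then_add(x, y, z), fused_mul_add(x, y, z))

regs = {"r0": 2.0, "r1": 3.0, "r2": 1.0, "r3": 0.0}
fma4(regs, "r3", "r0", "r1", "r2")   # r3 = r0 * r1 + r2, r0 untouched
fma3(regs, "r0", "r1", "r2")         # r0 = r0 * r1 + r2, old r0 is gone
```

In the three-operand form a compiler has to spend an extra move if the overwritten source is needed later, which is the practical cost of losing the fourth operand.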

One persistent worry that does not seem to be addressed is the lack of x86 decode bandwidth. As you can see, Bulldozer could decode four x86 ops per clock, and so can Piledriver. A quick glance at the execution pipelines shows that both integer cores can execute four instructions per clock. So can the FP unit, for a total of 12 instructions potentially needed per clock.

This is fed by a unit that can supply four decoded instructions per clock. See a problem? There is a lot more to this than just 12 - 4 = 8 instructions per clock less than desired though. Decode does not always mean one x86 instruction going to one Piledriver instruction; it could be one to many, or one to one, because x86 is not a clean or sane ISA. On top of this, there is macro-op fusion, so the math is far murkier than the above mess.
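The simple version of that math can be sketched as follows. The widths are the ones quoted above; the `expansion` parameter, the average number of internal ops produced per decoded x86 instruction, is a hypothetical knob standing in for the one-to-many decode cases, and the model ignores fusion, stalls, and everything else that makes the real picture murky.

```python
DECODE_WIDTH = 4       # x86 instructions decoded per clock (from the text)
INT_CORES = 2          # integer cores fed by that decoder
INT_ISSUE_WIDTH = 4    # instructions per clock per integer core (from the text)
FP_ISSUE_WIDTH = 4     # instructions per clock for the FP unit

def peak_execute_width():
    """Instructions per clock the execution side could consume at best."""
    return INT_CORES * INT_ISSUE_WIDTH + FP_ISSUE_WIDTH

def supplied_ops(expansion=1.0):
    """Internal ops per clock the decoder supplies (expansion is hypothetical)."""
    return DECODE_WIDTH * expansion

def shortfall(expansion=1.0):
    """How far decode falls short of peak execution width, never below zero."""
    return max(0.0, peak_execute_width() - supplied_ops(expansion))

print(peak_execute_width(), shortfall(1.0), shortfall(1.5))
```

Even with a generous expansion factor the decoder does not come close to saturating the back end in this model, though real code rarely sustains peak execution width either.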

Piledriver still ends up shorted for instructions, as there is more execution capability than decode, but the improvements to the front end mean the gap is considerably smaller on the second generation core. It is a pain point that won’t be properly addressed until Steamroller …. err…. rolls out next year. Minor changes to this front end have a disproportionate impact on the performance of the entire core though, and now you know a bit more about why. Major changes are in the pipe though, don’t worry.

Moving on to the instruction schedulers, both INT and FP are listed as improved, but given the disparity of the incoming data stream, this is likely to only affect single threaded code that is heavily populated by either INT or FP instructions, not mixed instruction streams. That said, AMD needs all the single threaded performance it can get, so these changes are welcome.

The L2 efficiency improvements are similar to the ICache window: a little faster, a little less stalling, and it all adds up. Same with the larger L1 TLB; less latency on MMU operations means more speed, fewer stalls, etc. Sounding like a broken record? There are few big bang gains in a modern OoO CPU, and anything big is the result of many little gains combined into one overarching name.

Faster instruction execution on divides is a similarly good thing, but unlikely to make a big difference in overall code throughput. If you have divide heavy code, it will fly, but the general use case is a small improvement. Again.

Page translation reload optimizations are similar, and probably related to the TLB gains. If you can shave a cycle or two off the majority of cases here, this is a clear win in a modern multi-threaded software environment. Moving between pages in different memory spaces is common enough to be a useful improvement, but once again, not a performance killer if it isn’t updated.

Same with the improved Store-To-Load forwarding. This is the technical way of saying that if an operation stores a result to memory, and the next operation needs it, instead of reloading it from cache, or worse yet main memory, it just pulls it from a magic buffer or queue that AMD won’t elaborate on. This could potentially save tens or hundreds of cycles worth of stalling when it hits, but good code should avoid the situation in the first place. Then again, most Trinitys will be running Windows, so we won’t talk about good code. S|A
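As a rough illustration of the idea, here is a toy model of store-to-load forwarding. It is not how Piledriver does it, since AMD is not saying; the class name, queue depth, and cycle counts are all invented for the example. Loads check a small queue of pending stores first and only fall back to the slower cache path on a miss.

```python
from collections import OrderedDict

FORWARD_CYCLES = 1   # hypothetical latency when the store queue supplies the data
CACHE_CYCLES = 4     # hypothetical latency when the load has to go to cache

class ToyLoadStoreUnit:
    def __init__(self, queue_entries=4):
        self.queue_entries = queue_entries
        self.store_queue = OrderedDict()   # address -> value, oldest first
        self.cache = {}

    def store(self, addr, value):
        # A newer store to the same address replaces the older pending one.
        self.store_queue.pop(addr, None)
        self.store_queue[addr] = value
        if len(self.store_queue) > self.queue_entries:
            # Queue is full: retire the oldest pending store to the cache.
            old_addr, old_value = self.store_queue.popitem(last=False)
            self.cache[old_addr] = old_value

    def load(self, addr):
        """Return (value, cycles), forwarding from the store queue when possible."""
        if addr in self.store_queue:
            return self.store_queue[addr], FORWARD_CYCLES
        return self.cache.get(addr, 0), CACHE_CYCLES

lsu = ToyLoadStoreUnit(queue_entries=2)
lsu.store(0x10, 7)
hit = lsu.load(0x10)      # still in the store queue, so it is forwarded
lsu.store(0x20, 8)
lsu.store(0x30, 9)        # pushes the store to 0x10 out to the cache
miss = lsu.load(0x10)     # now served from the cache at the slower latency
print(hit, miss)
```

The gap between the two paths here is a few cycles because the fallback is a cache hit; the tens or hundreds of cycles mentioned above come into play when the fallback is main memory.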

Note: This is part one of a series, Part 2 will be linked here when published.

Charlie Demerjian
