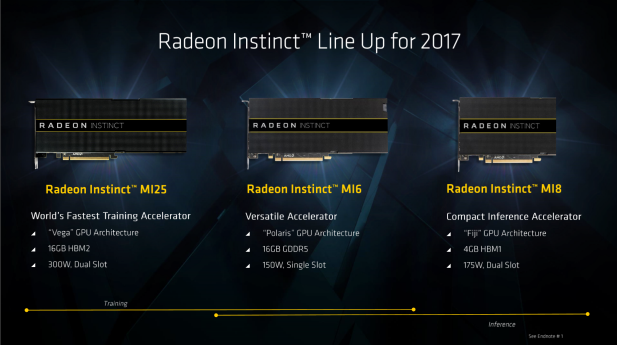

Starting in Q3, AMD’s Radeon Instinct line of server accelerators will be available to OEM partners. First announced late last year, Radeon Instinct products are tailored for compute-intensive applications like machine learning and HPC. AMD’s offering three models: the MI6 based on Polaris 10, the MI8 based on Fiji, and the MI25, which uses AMD’s new Vega 10 chip. Despite the use of older chips like Fiji and Polaris, AMD held back on launching the Radeon Instinct product stack until now to coincide with the Vega launch.

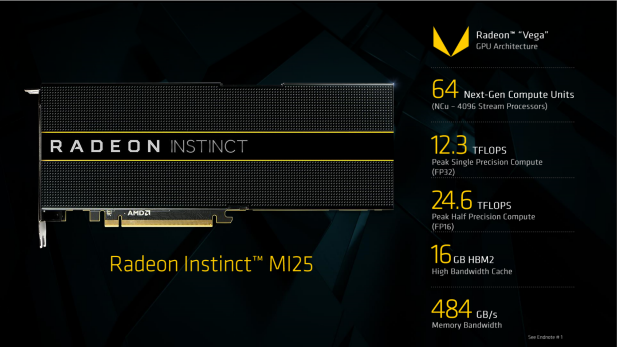

With the Radeon Instinct MI25, AMD’s now offering 24.6 TFlops of half precision compute, 12.3 TFlops of single precision, and 768 GFlops of double precision compute to its customers. This is all in a 300 Watt, passively cooled, dual slot form factor with 16 GB of HBM2 memory clocked at 945 MHz on a 2048-bit memory interface for a total of 484 GB/s of memory bandwidth.
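For readers who want to check how those figures fit together, here is a rough back-of-the-envelope sketch in Python. It assumes that the 945 MHz figure is the memory clock and that HBM2 moves two transfers per clock (double data rate). The function name and the `transfers_per_clock` parameter are made up for illustration and are not taken from any AMD material.

```python
def hbm2_bandwidth_gb_s(clock_mhz, bus_width_bits, transfers_per_clock=2):
    """Estimate peak memory bandwidth in GB/s (1 GB = 1e9 bytes).

    Assumption: the quoted clock is the memory clock and the interface
    is double data rate, so there are two transfers per clock cycle.
    """
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_second * bytes_per_transfer / 1e9


# Figures quoted in the article for the MI25.
bandwidth = hbm2_bandwidth_gb_s(clock_mhz=945, bus_width_bits=2048)
print(f"Memory bandwidth: {bandwidth:.2f} GB/s")  # 483.84 GB/s, quoted as 484

# Ratios between the quoted peak compute rates.
fp16_tflops, fp32_tflops, fp64_gflops = 24.6, 12.3, 768
print(f"FP16 vs FP32: {fp16_tflops / fp32_tflops:.1f}x")
print(f"FP32 vs FP64: {fp32_tflops * 1000 / fp64_gflops:.1f}x")
```

Under those assumptions the math lands on 483.84 GB/s, which rounds to the quoted 484 GB/s. The compute figures also line up neatly: half precision is twice the single precision rate, and double precision works out to roughly one sixteenth of it.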

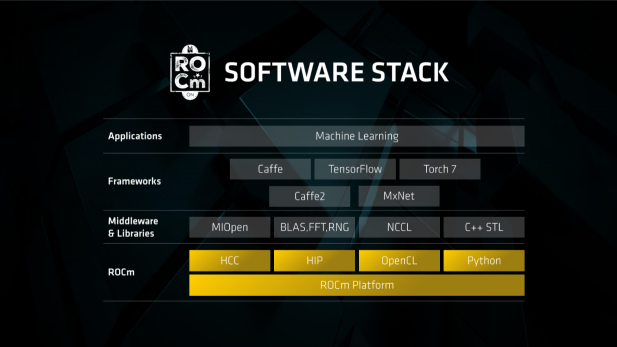

Of course, while the technical specifications of AMD’s server accelerators are interesting, they’re of rather limited importance given the software support gap that AMD is working on closing. In late 2015 AMD decided that it was time to get serious about GPU compute with the Boltzmann Initiative. That effort has since morphed into the ROCm open compute platform that AMD’s been pushing to its customers as part of the GPUOpen effort. If you check out the ROCm GitHub page you can see that while AMD doesn’t appear to have many people making commits, they are pretty active, and most of the ROCm tools have received updates in the past two months.

From a hardware perspective, AMD’s going in an interesting direction by offering accelerators designed to address different price and performance segments of this market. NVIDIA’s chosen to offer just two chips in this space: P100 and GP102. Part of that difference might be because NVIDIA’s chips were designed specifically to corner this market, whereas some might argue that AMD’s offerings just happen to be capable of servicing it.

It’s worth noting that NVIDIA’s quarterly budget for GPU research and development is larger than AMD’s yearly budget for both CPU and GPU research and development. Given these differences in resources and current market share, the Radeon Instinct MI25 represents a significant opportunity for AMD, provided that it can convince machine learning folks that ROCm is a viable platform for their work.

AMD also has one significant point of technical differentiation in this market segment: support for hardware SR-IOV. As Microsoft describes it, “SR-IOV allows a device, such as a network adapter, to separate access to its resources among various PCIe hardware functions.” Essentially, this technology allows for the virtualization of GPUs with minimal overhead. If you’re a cloud provider and you’re looking to offer virtual desktops or just raw GPU instances, AMD’s support for SR-IOV is a helpful feature. It’s also a positive that this is a standards-based technology rather than a replication of NVIDIA’s proprietary approach to GPU virtualization with GRID.
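Because SR-IOV is part of the PCIe standard, Linux reports it through generic PCI sysfs attributes (`sriov_totalvfs` and `sriov_numvfs`) rather than a vendor-specific interface. The sketch below lists devices that advertise the capability. The helper name `sriov_capable_devices` is hypothetical, and whether a particular GPU and its driver expose virtual functions through these attributes is an assumption, not something AMD has confirmed here.

```python
from pathlib import Path


def sriov_capable_devices(sysfs_root="/sys/bus/pci/devices"):
    """Return {pci_address: (enabled_vfs, total_vfs)} for SR-IOV capable devices.

    Assumption: a Linux host that exposes the generic PCI sysfs attributes
    sriov_totalvfs (maximum virtual functions) and sriov_numvfs (currently
    enabled virtual functions). Devices without sriov_totalvfs are skipped.
    """
    result = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return result
    for dev in sorted(root.iterdir()):
        total_file = dev / "sriov_totalvfs"
        num_file = dev / "sriov_numvfs"
        if not total_file.is_file():
            continue
        try:
            total = int(total_file.read_text().strip())
            enabled = int(num_file.read_text().strip()) if num_file.is_file() else 0
        except (OSError, ValueError):
            # Unreadable or malformed attribute: skip this device.
            continue
        result[dev.name] = (enabled, total)
    return result


if __name__ == "__main__":
    for address, (enabled, total) in sriov_capable_devices().items():
        print(f"{address}: {enabled} of {total} virtual functions enabled")
```

On a machine without SR-IOV hardware, or on a non-Linux host, this simply prints nothing.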

To support this launch, the ROCm 1.6 release is set to go public on June 29th. This update brings with it the MIOpen 1.0 compute library, which enables support for machine intelligence frameworks, with support planned for Caffe, TensorFlow, and Torch.

A little over a year ago I was working on an article detailing how AMD had missed the machine learning boat. That article was never published, but it’s amazing how this story has evolved since then. Back then ROCm was immature, there weren’t any products, and AMD didn’t have a story to tell about how it fit into this market. All of those issues have now largely been addressed. ROCm still has a ways to go and NVIDIA still owns this market segment, but for the first time AMD’s shown up for the fight. Let’s see what Radeon Instinct can do.

Thomas Ryan
