Calxeda has two big pieces of news at Computex: three new ODMs and a new card format. The ODMs focus on storage and the cards on flexibility for the ODMs, so there is an actual link between the two.

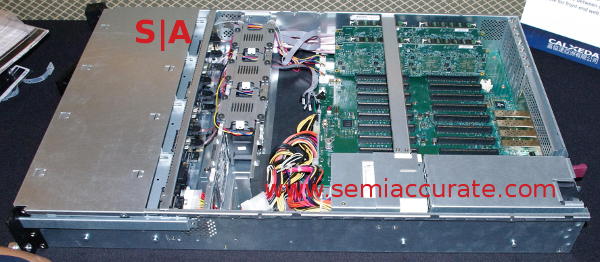

First up are the three ODMs now showing Calxeda systems: Aaeon, Foxconn, and Gigabyte. Aaeon had a two-node, 10-drive 1U storage server, while Foxconn showed off a 12-node beast capable of supporting 60 drives. In the middle of the road was Gigabyte, with a hybrid device that can hold up to 48 Calxeda nodes along with 12 3.5″ drives. It looks like this.

Gigabyte Calxeda server with two CPU nodes

More importantly, far more importantly, are the two new cards that between them rethink the whole Calxeda building-block paradigm. If you recall, when Calxeda first unveiled its EnergyCore SoCs there were two on a card, with all the I/O routed through the bottom connector. The two sockets had no more in common with their board mates than with any other socket in the system, which allowed the programmable interconnects to be assigned arbitrarily anywhere.

The new nodes, two CPU and one management

The new cards change this in two significant ways. First, the two sockets on a card are now directly linked to each other, so one link is hard-assigned on the card. Second, SATA, which used to have physical ports on the PCB, is now routed out through the bottom connector to the board. This simplifies the cards and vastly improves storage flexibility; you no longer need a storage device or two per blade.

Better yet, a few more technical changes allowed Calxeda to pull most of the backplane functionality onto the card, so the backplane goes from active to passive. What used to be a complex device is now just traces. The nodes do all the heavy lifting, so OEMs can focus their attention on things that will actually differentiate their offerings instead of redoing the basics. This also allowed Calxeda to route a few of the inter-node connections off the back of the backplane to link multiple chassis together. For the type of customer looking at Calxeda, this is a killer feature.

Another simplification is that chassis management no longer lives on the backplane; it is now on a separate card. Coupled with the ability to interconnect chassis, this means you can put in one management card per logical configuration, regardless of how many chassis that configuration spans. Data center trolls love this kind of stuff; it makes their lives easier.

In the end, Calxeda has effectively revamped its entire architecture at the physical level without having to change the silicon. These changes seem to be the right ones: they simplify designs, lower cost, speed time to market, and add flexibility. At least three big ODMs seem to think it is a good idea, and they don't tend to jump on things without a market behind them. S|A

Charlie Demerjian